Comprehensive end-to-end microbiome analysis using QIIME 2¶

Title: QIIME 2 enables comprehensive end-to-end analysis of diverse microbiome data and comparative studies with publicly available data

Running Title: Comprehensive end-to-end microbiome analysis using QIIME 2

Authors: Mehrbod Estaki 1,#, Lingjing Jiang 2,#, Nicholas A. Bokulich 3,4, Daniel McDonald 1, Antonio González 1, Tomasz Kosciolek 1,5, Cameron Martino 6,7, Qiyun Zhu 1, Amanda Birmingham 8, Yoshiki Vázquez-Baeza 7,9, Matthew R. Dillon 3, Evan Bolyen 3, J. Gregory Caporaso 3,4, Rob Knight 1,7,10,11*

1 Department of Pediatrics, University of California San Diego, La Jolla, CA, USA, 2 Division of Biostatistics, University of California San Diego, La Jolla, CA, USA, 3 Center for Applied Microbiome Science, Pathogen and Microbiome Institute, Northern Arizona University, Flagstaff, AZ, USA, 4 Department of Biological Sciences, Northern Arizona University, Flagstaff, AZ, USA, 5 Malopolska Centre of Biotechnology, Jagiellonian University, Kraków, Poland, 6 Bioinformatics and Systems Biology Program, University of California San Diego, La Jolla, CA, USA, 7 Center for Microbiome Innovation, University of California San Diego, La Jolla, CA, USA, 8 Center for Computational Biology and Bioinformatics, University of California San Diego, La Jolla, CA, USA, 9 Jacobs School of Engineering, University of California San Diego, La Jolla, CA, USA, 10 Department of Computer Science and Engineering, University of California San Diego, La Jolla, CA, USA, 11 Department of Bioengineering, University of California San Diego, La Jolla, CA, USA

* To whom correspondence should be addressed.

Rob Knight 9500 Gilman Dr. MC 0763 La Jolla, CA 92093 Phone: 858-246-1184 Email: robknight@ucsd.edu

# The first two authors should be regarded as Joint First Authors.

Abstract¶

QIIME 2 is a completely reengineered microbiome bioinformatics platform based on the popular QIIME platform, which it has replaced. QIIME 2 facilitates comprehensive and fully reproducible microbiome data science, improving accessibility to diverse users by adding multiple user interfaces. QIIME 2 can be combined with Qiita, an open-source web-based platform, to re-use available data for meta-analysis. The following protocol describes how to install QIIME 2 on a single computer and analyze microbiome sequence data, from processing of raw DNA sequence reads through generating publishable interactive figures. These interactive figures allow readers of a study to interact with data with the same ease as its authors, advancing microbiome science transparency and reproducibility. We also show how plug-ins developed by the community to add analysis capabilities can be installed and used with QIIME 2, enhancing various aspects of microbiome analyses such as improving taxonomic classification accuracy. Finally, we illustrate how users can perform meta-analyses combining different datasets using readily available public data through Qiita. In this tutorial, we analyze a subset of the Early Childhood Antibiotics and the Microbiome (ECAM) study, which tracked the microbiome composition and development of 43 infants in the United States from birth to two years of age, identifying microbiome associations with antibiotic exposure, delivery mode, and diet. For more information about QIIME 2, see https://qiime2.org. To troubleshoot or ask questions about QIIME 2 and microbiome analysis, join the active community at https://forum.qiime2.org.

Keywords: Microbiome, QIIME 2, bioinformatics, Qiita, metagenomics

Introduction¶

This tutorial illustrates the use of QIIME 2 (Bolyen et al., 2019) for processing, analyzing, and visualizing microbiome data. Here we use, as an example, a high-throughput 16S rRNA gene sequencing study, starting with raw sequences and producing publication-ready analysis and figures. QIIME 2 can also process other types of microbiome data, including amplicons of other markers such as 18S rRNA, ITS, and COI, shotgun metagenomics, and untargeted metabolomics. We will also show how to combine results from an individual study with data from other studies using the Qiita public database framework (Gonzalez et al., 2018), which can be used to confirm relationships between microbiome and phenotype variables in a new cohort, or to generate hypotheses for future testing.

A typical QIIME 2 analysis can vary in many ways, depending on your experimental and data analysis goals and on how you collected the data. In this tutorial, we use the QIIME 2 command-line interface, and focus on processing and analyzing a subset of samples from the Early Childhood Antibiotics and the Microbiome (ECAM) study (Bokulich, Chung, et al., 2016). We will start with raw sequence files and use a single analysis pipeline for clarity, but note where alternative methods are possible and why you might want to use them.

Before Starting¶

We recommend readers to follow the enhanced live version of this protocol (https://curr-protoc-bioinformatics.qiime2.org) which will be updated frequently to always reflect the newest release of QIIME 2. We also recommend that you read about the core concepts of QIIME 2 (https://docs.qiime2.org/2019.10/concepts/) before starting this tutorial to familiarize yourself with the platform’s main features and concepts, including enhanced visualization methods through QIIME 2 View, decentralized provenance tracking (which ensures reproducible bioinformatics), multiple interfaces (including the Python 3 API and QIIME 2 Studio graphical interface), the plugin architecture (which enables anyone to expand QIIME 2’s functionality), and semantic types (which enables QIIME 2 to help users avoid misusing their data). In general, we suggest referring to the QIIME 2 website (https://qiime2.org), which will always be the most up-to-date source for information and tutorials on QIIME 2, including newer versions of this tutorial. Questions, suggestions, and general discussion should always be directed to the QIIME 2 Forum (https://forum.qiime2.org). A brief ‘Glossary of terms’ for common QIIME 2 terminology is provided as Appendix 1.

USING QIIME 2 WITH MICROBIOME DATA¶

Necessary resources¶

Hardware: QIIME 2 can be installed on almost any computer system (native installation is available on Mac OS and Linux; or Windows via a virtual machine). The amount of free disk space and memory you will need vary dramatically depending on the number of samples and sequences you will analyze, and the algorithms you will use to do so. At present QIIME 2 requires a minimum of 6-7 GB for installation, and we recommend a minimum of 4 GB of memory as a starting point for small, and 8 GB of memory for most real-world datasets. Other types of analyses, such as those using shotgun metagenomics plugins, may require significantly more memory and disk space.

Software: An up-to-date web browser, such as the latest version of Firefox or Chrome is needed for the visualizations using QIIME 2 View.

Installing QIIME 2¶

The latest version of QIIME 2, as well as detailed instructions on how to install on various operating systems, can be found at https://docs.qiime2.org. QIIME 2 utilizes a variety of external independent packages, and while we strive to maintain backward compatibility, occasionally changes or updates to these external packages may create compatibility issues with older versions of QIIME 2. To avoid these problems we recommend always using the most recent version of QIIME 2 available online. The online tutorial will always provide installation instructions for the most up-to-date, tested, and stable version of QIIME 2.

Troubleshooting:

If you encounter any issues with installation, or at any other stages of this tutorial, please get in touch on the QIIME 2 Forum at https://forum.qiime2.org. The QIIME 2 Forum is the hub of the QIIME 2 user and developer communities. Technical support for users and developers is provided there, free of charge. We try to reply to technical support questions on the forum within 1-2 business days (though sometimes we need more time). Getting involved on the QIIME 2 Forum, for example by reading existing posts, answering questions, or sharing resources that you’ve created such as educational content, is a great way to get involved with QIIME 2. We strive to create an inclusive and welcoming community where we can collaborate to improve microbiome science. We hope you’ll join us!

(Re)Activating QIIME 2¶

If at any point during the analysis the QIIME 2 conda environment is closed or deactivated, QIIME 2 2019.10 can be reactivated by running the following command:

conda activate qiime2-2019.10

To determine the currently active conda environment, run the following command and look for the line that starts with “active environment”:

conda info

Using this tutorial¶

The following protocol was completed using QIIME 2 2019.10 and demonstrates usage with the command line interface (CLI). For users comfortable with Python 3 programming, an application programmer interface (API) version of this protocol is also available at https://github.com/qiime2/paper2/blob/master/notebooks/qiime2-protocol-API.ipynb. No additional software is needed for using the API. Jupyter notebooks for both of these protocols are also available at https://github.com/qiime2/paper2/tree/master/notebooks. Finally, an enhanced interactive live version of the CLI protocol is also available at https://curr-protoc-bioinformatics.qiime2.org with all intermediate files precomputed. While we strongly encourage users to install QIIME 2 and follow along with this tutorial, the enhanced live version provides an alternative for when time and computational resources are limited. Following along the live version of this protocol enables users to skip any step and instead download the pre-processed output required for a subsequent step. Additionally, the live version also provides simple ‘copy to clipboard’ buttons for each code block which, unlike copying from a PDF file, retains the original formatting of the code, making it easy to paste into other environments. The enhanced live protocol will also be updated regularly with every new release of QIIME 2, unlike the published version which will remain static with the 2019.10 version.

Acquire the data from the ECAM study¶

In this tutorial, we’ll be using QIIME 2 to perform cross-sectional as well as longitudinal analyses of human infant fecal microbiome samples. The samples we will be analyzing are a subset of the ECAM study, which consists of monthly fecal samples collected from children at birth up to 24 months of life, as well as corresponding fecal samples collected from the mothers throughout the same period. The original sequence files from this study are of the V4 region of the 16S rRNA gene that were sequenced across 5 separate runs (2x150 bp) on an Illumina MiSeq machine. To simplify and reduce the computational time required for this tutorial we have selected the forward reads of a subset of these samples for processing. To follow along with this protocol, create a new directory then download the raw sequences (~ 700 MB) and the corresponding sample metadata file into it.

mkdir qiime2-ecam-tutorial

cd qiime2-ecam-tutorial

Download URL: https://qiita.ucsd.edu/public_artifact_download/?artifact_id=81253

Save as: 81253.zip

wget \

-O "81253.zip" \

"https://qiita.ucsd.edu/public_artifact_download/?artifact_id=81253"

curl -sL \

"https://qiita.ucsd.edu/public_artifact_download/?artifact_id=81253" > \

"81253.zip"

unzip 81253.zip

mv mapping_files/81253_mapping_file.txt metadata.tsv

The bad CRC warnings here are fine to ignore. These are related to downloading

large files from Qiita and do not interfere with downstream work. You can also

delete the original zip file 81253.zip now to save space.

Explore sample metadata files¶

In the previous step, in addition to downloading sequence data, we downloaded

a researcher-generated sample metadata. In the context of a microbiome

study, sample metadata are any data that describe characteristics of the

samples that are being studied, the site they were collected from, and/or how

they were collected and processed. In this example, the ECAM study metadata include

characteristics like age at the time of collection, birth mode and diet of the

child, the type of DNA sequencing, and other information. This is all

information that is generally compiled at the time of sample collection, so

is something the researcher should be working on prior to a QIIME 2 analysis.

Suggested standards

for the type of study metadata to collect, and how to represent the values, are

discussed in detail in MIMARKS and MIxS (Yilmaz et al., 2011). In this

tutorial, we also include a Support Protocol on metadata preparation to help

users generate quality metadata. In QIIME 2, metadata is most commonly stored

as a TSV (i.e. tab-separated values) file. These files typically have a

.tsv or .txt file extension. TSV files are text files used to store

data tables, and the format can be read, edited and written by many types of

software, including spreadsheets and databases. Thus, it’s usually

straightforward to manipulate QIIME 2 sample metadata using the software of

your choosing. You can use a spreadsheet program of your choice such as Google

Sheets to edit and export your metadata files, but you must be extremely

cautious about automatic, and often silent, reformatting of values using these

applications. For example, the use of programs like Excel can lead to unwanted

reformatting of values, insertion of invisible spaces, or sorting of a table in

ways that scramble the connection between sample identifiers and the data.

These problems are very common and can lead to incorrect results, including

missing statistically significant patterns. See the “Metadata preparation”

section in the Support Protocols at the end of this document for details

regarding best practices for creating and maintaining metadata files.

Detailed formatting requirements for QIIME 2 metadata files can be found at https://docs.qiime2.org/2019.10/tutorials/metadata/. Metadata files stored in Google Sheets can be validated using Keemei (Rideout et al., 2016), an open-source Google Sheets plugin available at https://keemei.qiime2.org. Once Keemei is installed, in Google Sheets select Add-ons > Keemei > Validate QIIME 2 metadata file to determine whether the metadata file meets the required formatting of QIIME 2.

Open the metadata.tsv file with your software of choosing and explore the

content. Take note of the column names as we will be referring to these

throughout the protocol. Cual-ID may be useful for creating sample identifiers,

and the Cual-ID paper (Chase, Bolyen, Rideout, & Caporaso, 2016) provides some

recommendations on best practices for creating sample identifiers for data

management.

Importing DNA sequence data into QIIME 2 and creating a visual summary¶

The next step is to import our DNA sequence data (in this case, from the 16S

rRNA gene) into QIIME 2. All data used and generated by QIIME 2, with the

exception of metadata, exist as QIIME 2 artifacts, and use the .qza file

extension. Artifacts are zip files containing data (in the usual formats, such

as FASTQ) and QIIME 2-specific metadata describing the various characteristics

of the data such as its semantic type, data file format, relevant citations for

analysis steps that were performed to this point, and the QIIME 2 steps that

were taken to generate it (i.e., the data provenance).

QIIME 2 allows you to import and export data at many different steps, so that you can export it to other software or try out alternative methods for particular steps. When importing data into QIIME 2, you need to provide detail on what the data are, including the file format and the semantic type. Currently, the most common type of raw data from high-throughput amplicon sequencing is in FASTQ format. These files may contain single-end or paired-end DNA sequence reads, and will be in either multiplexed or demultiplexed format. Multiplexed files typically come as two (or three in the case of paired-end runs) files consisting of your sequences (forward and/or reverse, often but not always referred to as R1 and R2 reads, respectively) and a separate barcode file (often but not always referred to as the I1 reads). In demultiplexed format, you will have one (or two in the case of paired-end data) sequence files per sample as the sequences have already been assigned to their designated sample IDs based on the barcode files. For the demultiplexed format, the sample name will typically be a part of the file name. In this protocol our sequences are in single-end demultiplexed FASTQ format produced by Illumina’s Casava software. As our data is split across multiple files, to import we will need to provide QIIME 2 with the location of our files and assign them sample IDs; this is done using the manifest file. A manifest file is a user-created tab-separated values file with two columns: the first column sample-id holds the name you assign to each of your samples, and the second column absolute-filepath provides the absolute file path leading to your raw sequence files. For example:

sample-id absolute-filepath

10249.M001.03R $PWD/demux-se-reads/10249.M001.03R.fastq.gz

10249.M001.03SS $PWD/demux-se-reads/10249.M001.03SS.fastq.gz

10249.M001.03V $PWD/demux-se-reads/10249.M001.03V.fastq.gz

Alternatively, your sample metadata file can also double as a manifest file by adding the absolute-filepath column to it; in this protocol we demonstrate the creation and use of a separate manifest file. You can create a manifest file in a variety of ways using your favorite text editor application. Here we use a simple bash script to create ours.

Create the manifest file with the required column headers.

echo -e "sample-id\tabsolute-filepath" > manifest.tsv

Use a loop function to insert the sample names into the sample-id column and add the full paths to the sequence files in the absolute-filepath column.

for f in `ls per_sample_FASTQ/81253/*.gz`; do n=`basename $f`; echo -e "12802.${n%.fastq.gz}\t$PWD/$f"; done >> manifest.tsv

Use the manifest file to import the sequences into QIIME 2

qiime tools import \

--input-path manifest.tsv \

--type 'SampleData[SequencesWithQuality]' \

--input-format SingleEndFastqManifestPhred33V2 \

--output-path se-demux.qza

Alternative Pipeline:

Your data may not be demultiplexed prior to importing to QIIME 2. Instructions on how to import multiplexed FASTQ files, as well as a variety of other data types, can be found online at https://docs.qiime2.org/2019.10/tutorials/importing/. With multiplexed data, you will also need to demultiplex your sequences prior to the next step. Demultiplexing in QIIME 2 can be performed using either the q2-demux (https://docs.qiime2.org/2019.10/plugins/available/demux/) plugin which is optimized for data produced using the EMP protocol (Caporaso et al., 2012), or the q2-cutadapt (https://docs.qiime2.org/2019.10/plugins/available/cutadapt/) plugin (which additionally supports demultiplexing of dual-index barcodes using cutadapt (Martin, 2011))

The demultiplexed artifact allows us to create an interactive summary of our sequences. This summary provides information useful for assessing the quality of the DNA sequencing run, including the number of sequences that were obtained per sample, and the distribution of sequence quality scores at each position.

Create a summary of the demultiplexed artifact:

qiime demux summarize \

--i-data se-demux.qza \

--o-visualization se-demux.qzv

You’ll notice that the output of the summarize action above is a Visualization,

with the file extension .qzv. Visualizations are a type of QIIME 2 Result. Like

Artifacts, the other type of QIIME 2 Result, they contain information such as

metadata, provenance, and relevant citations, but they are outputs that cannot

be used as input to other analyses in QIIME 2. Instead, they are intended for

human consumption. Visualizations often contain a statistical results table, an

interactive figure, one or more static images, or a combination of these.

Because they don’t need to be used for downstream data analysis in QIIME 2,

there is a lot of flexibility in what they can contain. All QIIME 2 Results,

including Visualizations and Artifacts, can be viewed by running qiime tools

view or alternatively by loading them with QIIME 2 View

(https://view.qiime2.org/). QIIME 2 View does not require QIIME 2 to be

installed, making it useful for sharing data with collaborators who do not have

QIIME 2 installed. Try visualizing se-demux.qzv using each of these methods,

then use the method you prefer for the rest of this tutorial.

qiime tools view se-demux.qzv

Explore the Visualization results:

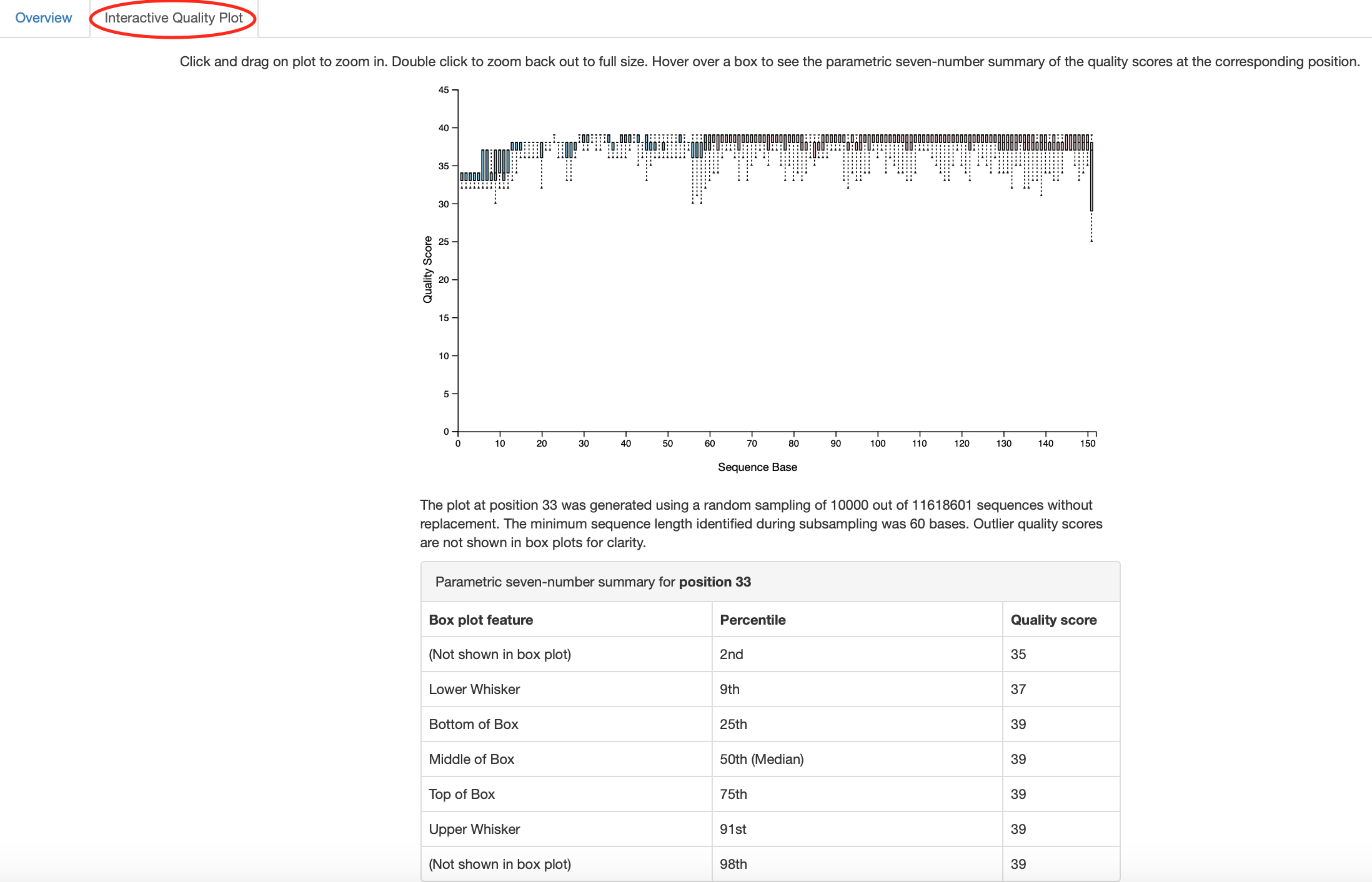

In the first ‘Overview’ tab we see a summary of our sequence counts followed by

a per-sample breakdown. If you click on the ‘Interactive Quality plot’ tab (Figure

1), you can interact with the sequence quality plot, which shows a boxplot of

the quality score distribution for each position in your input sequences.

Because it can take a while to compute these distributions from all of your

sequence data (often tens of millions of sequences), a subset of your reads are

selected randomly (sampled without replacement), and the quality scores of only

those sequences are used to generate the boxplots. By default, 10,000

sequences are subsampled, but you can control that number with --p-n on the

demux summarize command. Keep in mind that because of this random subsampling,

every time you run demux summarize on the same sequence data you will obtain

slightly different plots.

Click and drag on plot to zoom in. When you hover the mouse over a boxplot for a given base position, the boxplot’s data is shown in a table below the interactive plot as a parametric seven-number summary This is a standard summary statistics of a dataset composed of 2nd, 9th, 25th, 50th, 75th, 91st, and 98th percentiles and can be used as a simple check for assumptions of normality. These values describe the distribution of quality scores at that position in your subsampled sequences. You can click and drag on the plot to zoom in, or double click to zoom back out to full size. These interactive plots can be used to determine if there is a drop in quality at some point in your sequences, which can be useful in choosing truncation and trimming parameters in the next section.

Sequence quality control and feature table construction¶

Traditionally, quality control of sequences was performed by trimming and filtering sequences based on their quality scores (Bokulich et al., 2013), followed by clustering them into operational taxonomic units (OTUs) based on a fixed dissimilarity threshold, typically 97% (Rideout et al., 2014). Today, there are better methods for quality control that correct amplicon sequence errors and produce high-resolution amplicon sequence variants that, unlike OTUs, resolve differences of as little as one nucleotide. These “denoisers” have many advantages over traditional clustering-based methods, as discussed in (Callahan, McMurdie, & Holmes, 2017). QIIME 2 currently offers denoising via DADA2 (q2-dada2) and Deblur (q2-deblur) plugins. The inferred ESVs produced by DADA2 are referred to as amplicon sequence variants (ASVs), while those created by Deblur are called sub-OTUs (sOTUs). In this protocol we will refer to products of these denoisers, regardless of their method of origin, as features. The major differences in the algorithms and motivation for these and other denoising methods are reviewed in Nearing et al. (Nearing, Douglas, Comeau, & Langille, 2018) and Caruso et al. (Caruso, Song, Asquith, & Karstens, 2019). According to these independent evaluations, denoising methods were consistently more successful than clustering methods in identifying true community composition while only small differences were reported among the denoising methods. We therefore view method selection here as a personal choice that research teams should make. Some practical differences may drive selection of these methods. For instance, DADA2 includes joining of paired-end reads in its processing workflow and is therefore simpler to use when paired-end read joining is desired, while Deblur users must join reads independently prior to denoising using other plugins such as q2-vsearch’s join-pairs method (Rognes, Flouri, Nichols, Quince, & Mahژ, 2016).

In this tutorial, we’ll denoise our sequences with q2-deblur which uses a pre-calculated static sequence error profile to associate erroneous sequence reads with the true biological sequence from which they are derived. Unlike DADA2, which creates sequence error profiles on a per analysis basis, this allows Deblur to be simultaneously applied across different datasets, reflecting its design motivation for performing meta-analyses. Additionally, using a pre-defined error profile generally results in shorter runtimes.

Deblur is applied in two steps.

Apply an initial quality filtering process based on quality scores. This method is an implementation of the quality filtering approach described by Bokulich et al. (Bokulich et al., 2013).

qiime quality-filter q-score \

--i-demux se-demux.qza \

--o-filtered-sequences demux-filtered.qza \

--o-filter-stats demux-filter-stats.qza

Apply the Deblur workflow using the denoise-16S action. This method requires one parameter that is used in quality filtering,

--p-trim-lengthwhich truncates the sequences at position n. The choice of this parameter is based on the subjective assessment of the quality plots produced from the previous step. In general, we recommend setting this value to a length where the median quality score begins to drop below 30, or 20 if the overall run quality is too low. One situation where you might deviate from that recommendation is when performing a meta-analysis across multiple sequencing runs. In this type of meta-analysis, it is critical that the read lengths be the same for all of the sequencing runs being compared to avoid introducing a study-specific bias. In the current example dataset, our quality plot shows high quality scores along the full length of our reads, therefore it is reasonable to truncate our reads at the 150 bp position.

qiime deblur denoise-16S \

--i-demultiplexed-seqs demux-filtered.qza \

--p-trim-length 150 \

--p-sample-stats \

--p-jobs-to-start 4 \

--o-stats deblur-stats.qza \

--o-representative-sequences rep-seqs-deblur.qza \

--o-table table-deblur.qza

Output artifacts:

Tip!

The denoising step is often one of the longest steps in microbiome analysis

pipelines. Luckily, both DADA2 and Deblur are parallelizable, meaning you

can significantly reduce computation time if your machine has access to

multiple cores. To increase the number of cores you wish to designate to

this task, use the --p-jobs-to-start parameter to change the default

value of 1 to a value suitable to your machine. As a reminder, if you are

following the online version of this protocol, you can skip this step and

download the output artifacts and use those in the following steps.

Deblur generates three outputs. An artifact with the semantic type

FeatureTable[Frequency], which is a table of the count of each observed

feature in each sample, and an artifact with the semantic type

FeatureData[Sequence], which contains the sequence that defines each

feature in the table which will be used later for assigning taxonomy to

features and generating a phylogenetic tree, and summary statistics of the

Deblur run in a DeblurStats artifact. Each of these artifacts can be visualized

to provide important information.

Create a visualization summary of the DeblurStats artifact with the command:

qiime deblur visualize-stats \

--i-deblur-stats deblur-stats.qza \

--o-visualization deblur-stats.qzv

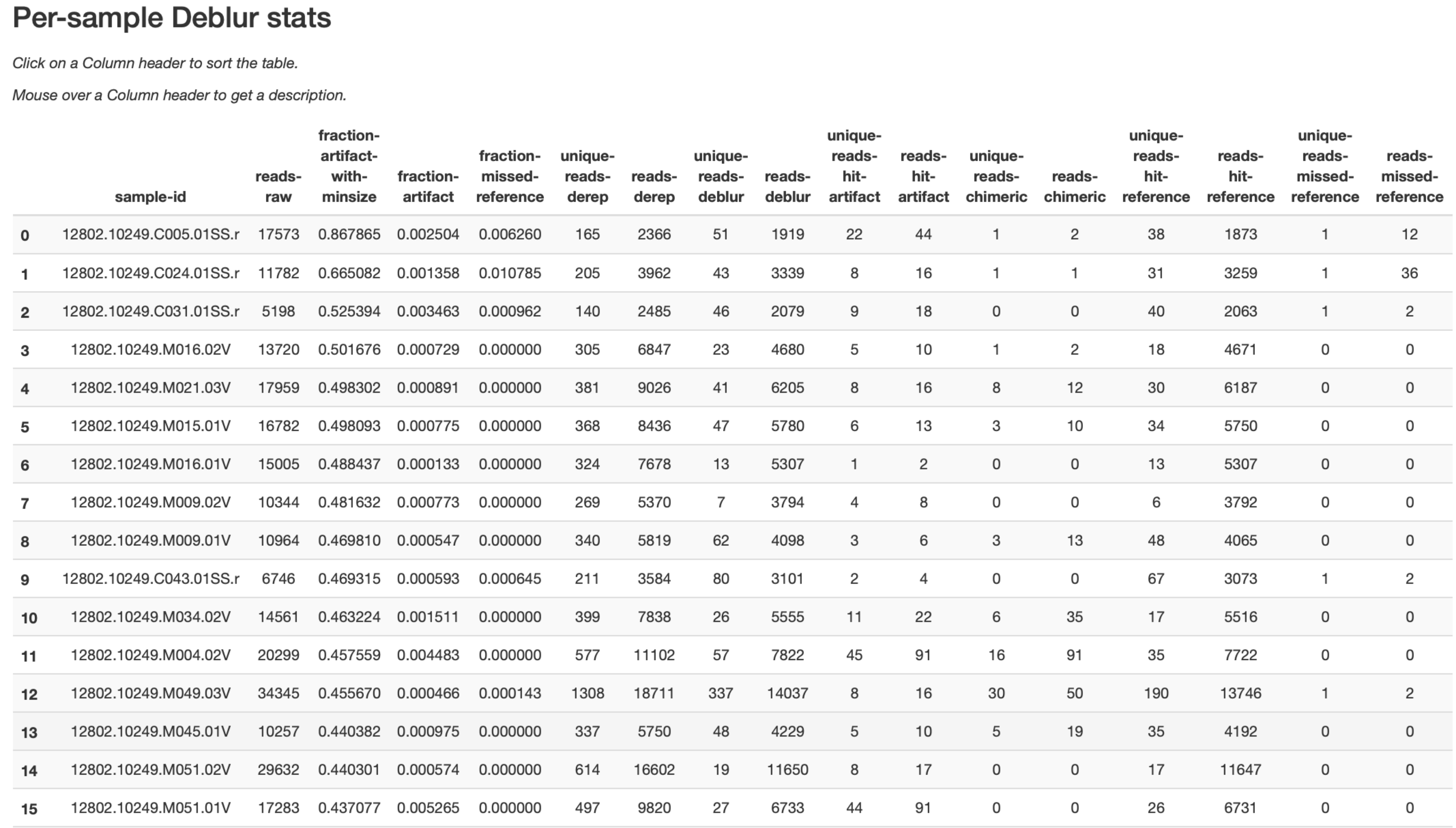

The statistics summary (Figure 2) provides us with information about what

happened to each of the samples during the deblur process. The reads-raw column

gives information on the number of reads presented to the deblur algorithm.

Because deblur works by deleting erroneous reads that it detects, the final

number of reads is smaller than the starting number. The three columns that

follow (fraction-artifact-with-minsize, fraction-artifact and

fraction-missed-reference) summarize the data from other columns in a

convenient way. They identify potential problems with the data at an early

stage. Fraction-artifact-with-minsize is the fraction of sequences detected as

artifactual, including those that fall below the minimum length threshold

(specified by the --p-trim-length parameter). Fraction-artifact is the

fraction of raw sequences that were identified as artifactual.

Fraction-missed-reference is the fraction of post-deblur sequences that were

not recruited by the positive reference database. The subsequent columns

provide information about the number of sequences remaining after dereplication

(unique-reads-derep, reads-derep), following deblurring (unique-reads-deblur,

reads-deblur), number of hits that recruited to the negative reference database

following deblurring process (unique-reads-hit-artifact, reads-hit-artifact),

chimeric sequences detected (unique-reads-chimeric and reads-chimeric),

sequences that match/miss the positive reference database

(unique-reads-hit-reference, reads-hit-reference, unique-reads-missed-reference

and reads-missed-reference). The number in the reads-hit-reference column is

the final number of per-sample sequences present in the table-deblur.qza

QIIME 2 artifact.

Note

The shorthand “artifact” in the per-sample Deblur statistics denotes artifactual sequences (i.e. those erroneously generated as byproducts of the PCR and DNA sequencing process), not a QIIME 2 artifact (i.e. a valid data product of QIIME 2).

Visualize the representative sequences by entering:

qiime feature-table tabulate-seqs \

--i-data rep-seqs-deblur.qza \

--o-visualization rep-seqs-deblur.qzv

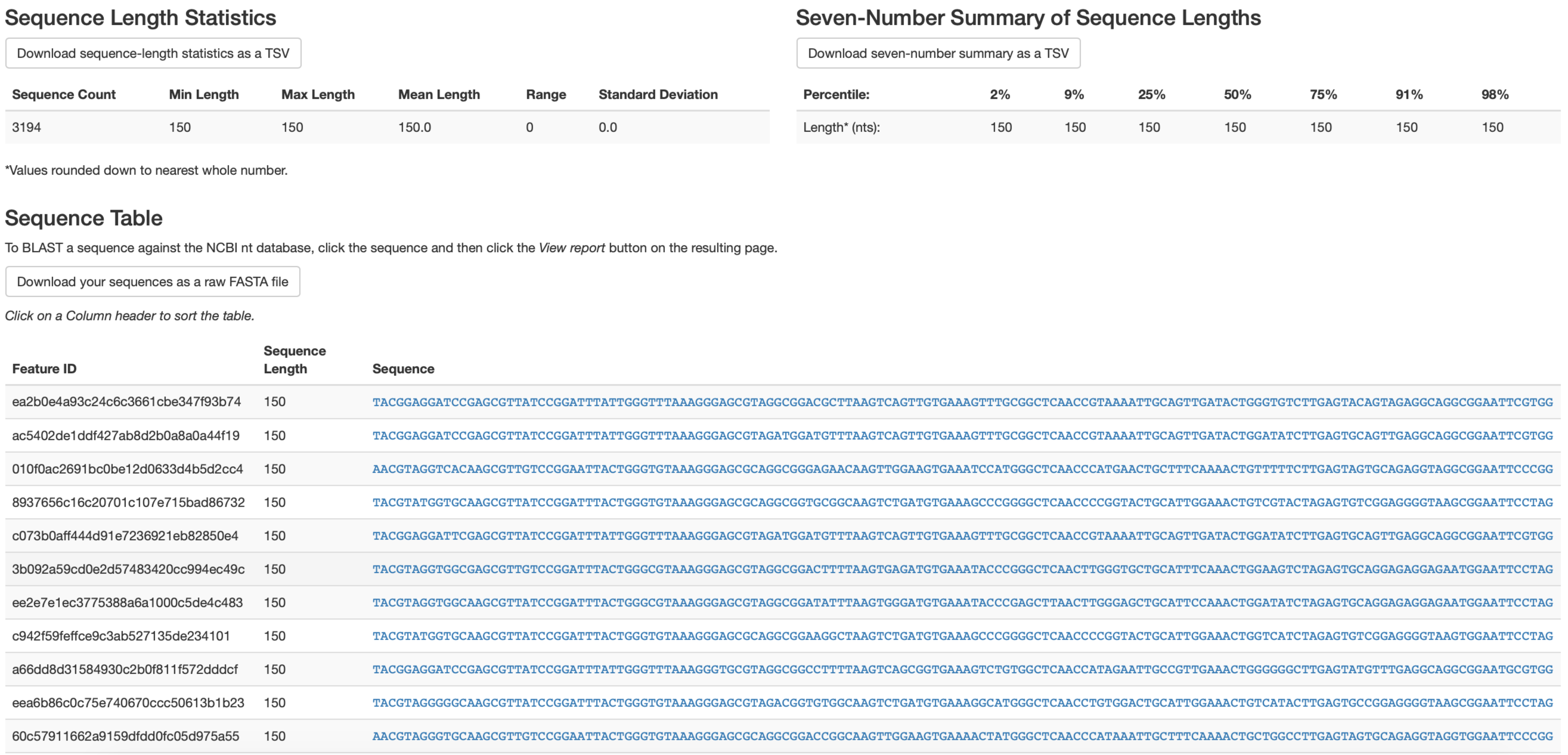

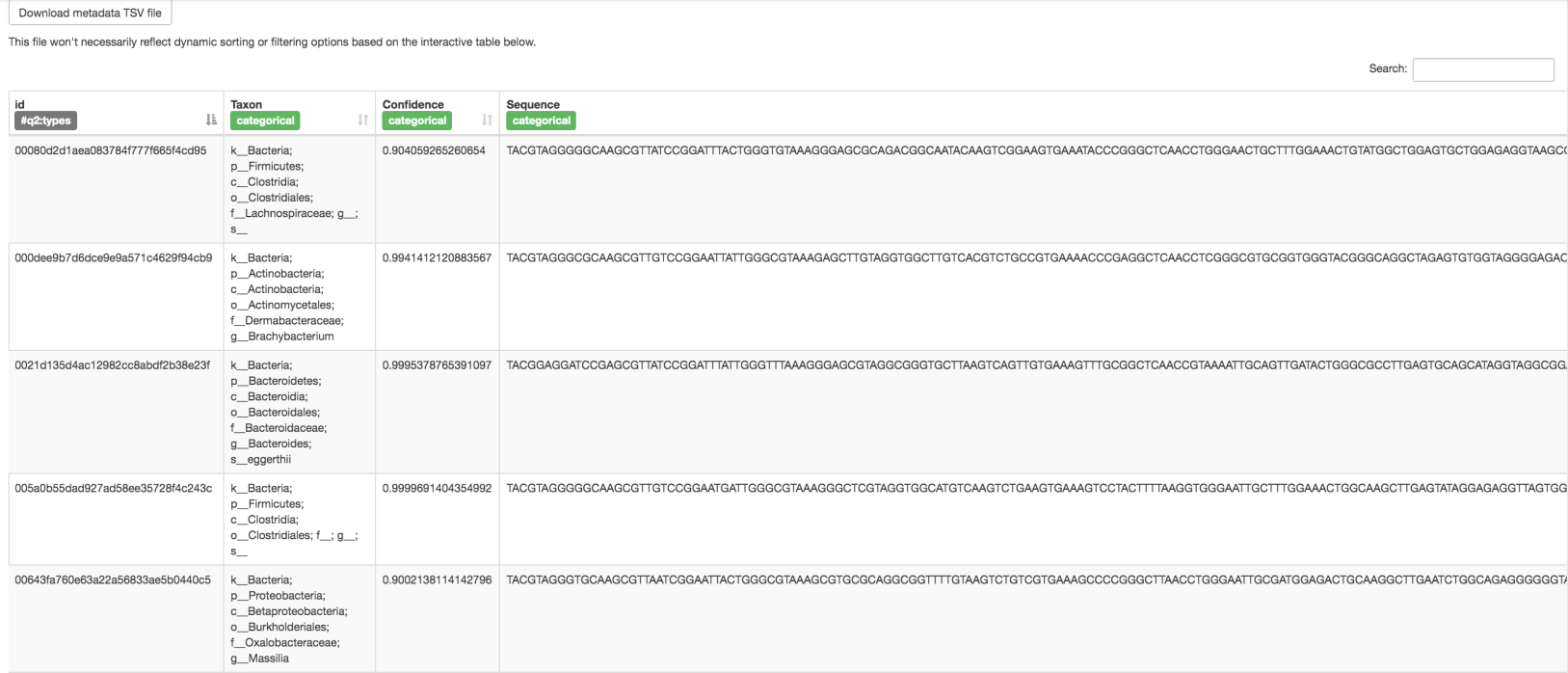

This Visualization (Figure 3) will provide statistics and a seven-number summary of sequence lengths, and more importantly, show a sequence table that maps feature IDs to sequences, with links that allow you to easily BLAST each sequence against the NCBI nt database. To BLAST a sequence against the NCBI nt database, click the sequence and then click the View report button on the resulting page. This will be useful later in the tutorial, when you want to learn more about specific features that are important in the data set. Note that automated taxonomic classification is performed at a later step, as described below; the NCBI-BLAST links provided in this Visualization are useful for assessing the taxonomic affiliation and alignment of individual features to the reference database. Results of the ‘top hits’ from a simple BLAST search such as this are known to be poor predictors of the true taxonomic affiliations of these features, especially in cases where the closest reference sequence in the database is not very similar to the sequence you are using as a query.

Note

By default, QIIME 2 uses MD5 hashing of a feature’s full sequence to assign

a feature ID. These are the 32-bit strings of numbers and characters you

see in the Feature ID column above. Hashing in q2-deblur can be disabled by

adding the --p-no-hashed-feature-ids parameter.

Visualize the feature table. Note that in this step, we can provide our metadata file, which then adds information about sample groups into the resulting summary output. Adding the metadata is useful for checking that all groups (e.g. a given age or sex of subject) have enough samples and sequences to proceed with analysis. This check is important because variation in the number of sequences per sample, which is typically not fully under control, often leads to samples being dropped from the analysis because too few reads were obtained from them.

qiime feature-table summarize \

--i-table table-deblur.qza \

--m-sample-metadata-file metadata.tsv \

--o-visualization table-deblur.qzv

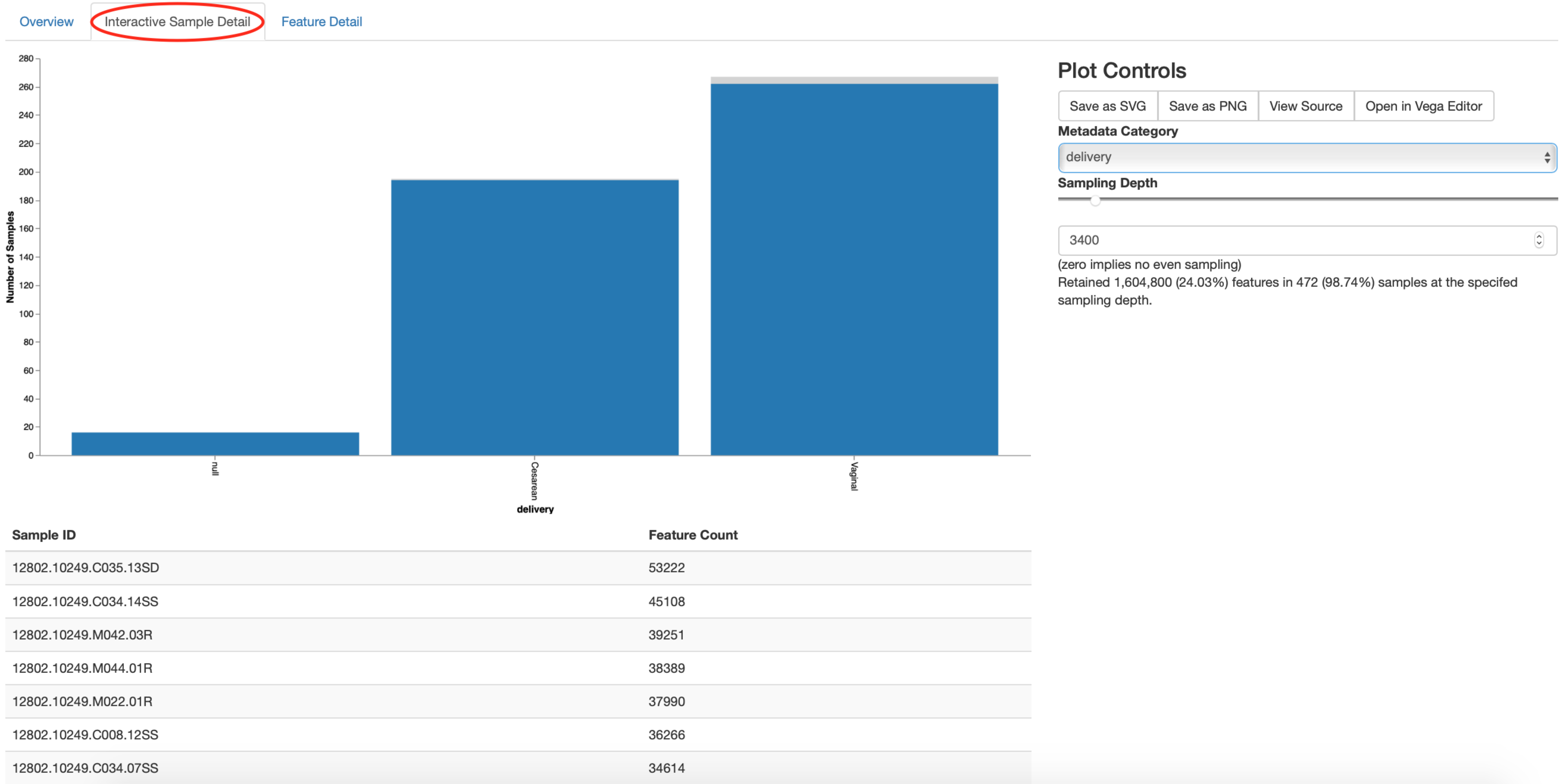

The first ‘Overview’ tab gives information about how many sequences come from each sample, histograms of those distributions, and related summary statistics. The ‘Interactive Sample Detail’ tab (Figure 4) shows a bar plot of the number of samples associated with the metadata category of interest, and the feature count in each sample is shown in the table below. Note that you can choose the metadata categories and change sampling depth by dragging the bar or typing in the value. The ‘Feature Detail’ tab shows the frequency and number of observed samples associated with each feature.

Alternative Pipeline:

If traditional OTU clustering methods are desired, QIIME 2 users can perform these using the q2-vsearch plugin (Rognes et al., 2016): https://docs.qiime2.org/2019.10/plugins/available/vsearch/. However, we recommend that denoising methods be used prior to clustering in order to utilize the superior quality-control procedures within these tools.

Generating a phylogenetic tree¶

Although microbiome data can be analyzed without a phylogenetic tree, many commonly used diversity analysis methods such as Faith’s phylogenetic diversity (Faith, 1992) and UniFrac (C. Lozupone & Knight, 2005) require one. To use these methods, we must construct a phylogenetic tree that allows us to consider evolutionary relatedness between the DNA sequences.

QIIME 2 offers several methods for reconstructing phylogenetic trees based on features found in your data. These include several variants of traditional alignment-based methods of building a de novo tree, as well as a fragment insertion method that aligns your features against a reference tree. It should be noted that de novo trees reconstructed from short sequences result in low quality trees because the sequences do not contain enough information to give the correct evolutionary relationships over large evolutionary distances, and thus should be avoided when possible (Janssen et al., 2018). For this tutorial, we will use the fragment insertion tree building method as described by Janssen et al. (Janssen et al., 2018) using the sepp action of the q2-fragment-insertion plugin, which has been shown to outperform traditional alignment-based methods with short 16S amplicon data. This method aligns our unknown short fragments to full length sequences in a known reference database and then places them onto a fixed tree. Note that this plugin has only been tested and benchmarked on 16S data against the Greengenes reference database (McDonald et al., 2012), so if you are using different data types you should consider the alternative methods mentioned in the box below.

Download a backbone tree as the base for our features to be inserted onto. Here, we use the greengenes (16s rRNA) reference database.

wget -O "sepp-refs-gg-13-8.qza" \

"https://data.qiime2.org/2019.10/common/sepp-refs-gg-13-8.qza"

Create an insertion tree by entering the following commands:

qiime fragment-insertion sepp \

--i-representative-sequences rep-seqs-deblur.qza \

--i-reference-database sepp-refs-gg-13-8.qza \

--p-threads 4 \

--o-tree insertion-tree.qza \

--o-placements insertion-placements.qza

The newly formed insertion-tree.qza is stored as a rooted phylogenetic tree (of

semantic type Phylogeny[Rooted] and can be used in downstream analysis

for phylogenetic diversity computations.

Tip!

Building a tree using SEPP can be computationally demanding and often has

longer run times than most steps in a typical microbiome analysis pipeline.

The --p-threads parameter which, similar to the --p-jobs-to-start

parameter from q2-deblur, allows this action to be performed in parallel

across multiple cores, significantly reducing run time. See the developers’

recommendations with regards to run-time optimization at

https://github.com/qiime2/q2-fragment-insertion#expected-runtimes. As a

reminder, if you are following the online version of this protocol, you

can skip this step and download the output artifacts and use those in the

following steps.

Once the insertion tree is created, you must filter your feature table so that it only contains fragments that are in the insertion tree. This step is needed because SEPP might reject the insertion of some fragments, such as erroneous sequences or those that are too distantly related to the reference alignment and phylogeny. Features in your feature-table without a corresponding phylogeny will cause diversity computation to fail, because branch lengths cannot be determined for sequences not in the tree.

Filter your feature-table by running the following:

qiime fragment-insertion filter-features \

--i-table table-deblur.qza \

--i-tree insertion-tree.qza \

--o-filtered-table filtered-table-deblur.qza \

--o-removed-table removed-table.qza

This command generates two feature-tables: The filtered-table-deblur.qza

contains only features that are also present in the tree while the

removed-table.qza contains features not present in the tree. Both of these

tables can be visualized as shown in Step 5 of the previous section.

Alternative Pipeline:

If a traditional de novo phylogenetic tree is desired/required, QIIME 2 offers several methods (FastTree (Price, Dehal, & Arkin, 2010), IQ-TREE (Nguyen, Schmidt, von Haeseler, & Minh, 2015) and RAxML (Stamatakis, 2014) to reconstruct these using the q2-phylogeny plugin (https://docs.qiime2.org/2019.10/plugins/available/phylogeny/). A tree produced by any of these alignment-based methods can be used with your original feature-table without the need for the filtering that SEPP requires. However, if some of your sequences are not 16S rRNA genes, the tree will be incorrect in ways that may severely affect your results.

Visualize the phylogenetic tree.

The phylogenetic tree artifact (semantic type: Phylogeny[Rooted])

produced in this step can be readily visualized using q2-empress

(https://github.com/biocore/empress) or iTOL’s (Letunic & Bork, 2019)

interactive web-based tool by simply uploading the artifact at

https://itol.embl.de/upload.cgi. The underlying tree, in Newick format, can

also be easily exported for use in your application of choice (see the

“Exporting QIIME 2 data” section in Supporting Protocols.

Taxonomic classification¶

While sequences derived from denoising methods provide us with the highest possible resolution of our features given our sequencing data, it is usually desirable to know the taxonomic affiliation of the microbes from which sequences were obtained. QIIME 2 provides several methods to predict the most likely taxonomic affiliation of our features through the q2-feature-classifier plugin (Bokulich, Kaehler, et al., 2018). These include both alignment-based consensus methods and Naive Bayes (and other machine-learning) methods. In this tutorial we will use a Naive Bayes classifier, which must be trained on taxonomically-defined reference sequences covering the target region of interest. Some pre-trained classifiers are available through the QIIME 2 Data Resources page (https://docs.qiime2.org/2019.10/data-resources/) and some have been made available by users on the QIIME 2 Community Contributions channel (https://forum.qiime2.org/c/community-contributions). If a pre-trained classifier suited for your region of interest or reference database is not available through these resources, you can train your own by following the online tutorial (https://docs.qiime2.org/2019.10/tutorials/feature-classifier/). In the current protocol we will train a classifier specific to our data that (optionally), which also incorporates environment-specific taxonomic abundance information to improve species inference. This bespoke method has been shown to improve classification accuracy (Kaehler et al., 2019) when compared to traditional Naive-Bayes classifiers which assume that all species in the reference database are equally likely to be observed in your sample (i.e. that seafloor microbes are just as likely to be found in a stool sample as microbes usually associated with stool).

To train a classifier using this bespoke method, we need 3 files: (1) a set of reference reads (2) a reference taxonomy, and (3) taxonomic weights. Taxonomic weights can be customized for specific sample types and reference data using the q2-clawback plugin (Kaehler et al., 2019) (see alternative pipeline recommendation below), or we can obtain pre-assembled taxonomic weights from the readytowear collection (https://github.com/BenKaehler/readytowear). This collection also contains the reference reads and taxonomies required. The taxonomic weights used in this tutorial have been assembled with 16S rRNA gene sequence data using the Greengenes reference database trimmed to the V4 domain (bound by the 515F/806R primer pair as used in the ECAM study). Here, we will use the pre-calculated taxonomic weights specific to human stool data. For other sample types, make sure to pick the appropriate weights best fit for your data, and the appropriate sequence reference database; a searchable inventory of available weights is available at https://github.com/BenKaehler/readytowear/blob/master/inventory.tsv.

Start by downloading the three required files from the inventory:

Download URL: https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/human-stool.qza

Save as: human-stool.qza

wget \

-O "human-stool.qza" \

"https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/human-stool.qza"

curl -sL \

"https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/human-stool.qza" > \

"human-stool.qza"

Download URL: https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/ref-seqs-v4.qza

Save as: ref-seqs-v4.qza

wget \

-O "ref-seqs-v4.qza" \

"https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/ref-seqs-v4.qza"

curl -sL \

"https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/ref-seqs-v4.qza" > \

"ref-seqs-v4.qza"

Download URL: https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/ref-tax.qza

Save as: ref-tax.qza

wget \

-O "ref-tax.qza" \

"https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/ref-tax.qza"

curl -sL \

"https://github.com/BenKaehler/readytowear/raw/master/data/gg_13_8/515f-806r/ref-tax.qza" > \

"ref-tax.qza"

Train a classifier using these files:

qiime feature-classifier fit-classifier-naive-bayes \

--i-reference-reads ref-seqs-v4.qza \

--i-reference-taxonomy ref-tax.qza \

--i-class-weight human-stool.qza \

--o-classifier gg138_v4_human-stool_classifier.qza

Output artifacts:

Assign taxonomy to our representative sequences using our newly trained classifier:

qiime feature-classifier classify-sklearn \

--i-reads rep-seqs-deblur.qza \

--i-classifier gg138_v4_human-stool_classifier.qza \

--o-classification bespoke-taxonomy.qza

This new bespoke-taxonomy.qza data artifact is a FeatureData[Taxonomy]

type which can be used as input in any plugins that accept taxonomic

assignments.

Visualize our taxonomies by entering the following:

qiime metadata tabulate \

--m-input-file bespoke-taxonomy.qza \

--m-input-file rep-seqs-deblur.qza \

--o-visualization bespoke-taxonomy.qzv

The Visualization (Figure 5) shows the classified taxonomic name for each

feature ID, with additional information on confidence level and sequences. You

can reorder the table by clicking the sorting button next to each column name.

Recall that the rep-seqs.qzv Visualization we created above allows you to

easily BLAST the sequence associated with each feature against the NCBI nt

database. Using that Visualization and the bespoke-taxonomy.qzv

Visualization created here, you can compare the taxonomic assignments of

features of interest with those from BLAST’s top hit. Because these methods are

only estimates, it is not uncommon to find disagreements between the predicted

taxonomies. The results here will generally be more accurate than those

received from the simple BLAST search linked from the rep-seqs.qzv

Visualization.

Alternative Pipeline:

To assemble your own taxonomic weights for regions not available in the readytowear inventory, follow the detailed instructions outlined at https://forum.qiime2.org/t/using-q2-clawback-to-assemble-taxonomic-weights

Filtering data¶

So far, in addition to our sample metadata, we have obtained a

quality-controlled FeatureTable[Frequency], a Phylogeny[Rooted], and a

FeatureData[Taxonomy] artifact. We are now ready to explore our microbial

communities and perform various statistical tests. In the following sections we

will explore the microbial communities of our samples from children only, and

thus will separate these samples from those of the mothers.

QIIME 2 provides numerous methods to filter your data. These include total feature frequency-based filtering, identity-based filtering, metadata-based filtering, taxonomy-based filtering etc. Filtering is performed through the q2-feature-table plugin. For a comprehensive list of available filtering methods and examples on how to perform them visit https://docs.qiime2.org/2019.10/tutorials/filtering/. To separate the child samples we will use the filter-samples action to separate samples based on the metadata column “mom_or_child”, where a value of “C” represents a child sample.

qiime feature-table filter-samples \

--i-table filtered-table-deblur.qza \

--m-metadata-file metadata.tsv \

--p-where "[mom_or_child]='C'" \

--o-filtered-table child-table.qza

We now have a new subsetted feature table consisting of child samples only. Let’s visualize this new feature table as we did previously:

qiime feature-table summarize \

--i-table child-table.qza \

--m-sample-metadata-file metadata.tsv \

--o-visualization child-table.qzv

Load this new Visualization artifact and keep it open, as we will be referring to this in the following section.

Alpha rarefaction plots¶

One of the first steps in a typical microbiome analysis pipeline is to evaluate

the sampling depth of our samples to determine whether sufficient surveying

effort has been achieved. Sampling depth will naturally differ between samples,

because the number of sequences generated by current sequencing instruments are not

evenly distributed among samples nor correlated with sample biomass, and

therefore, to avoid bias, must be normalized prior to analysis (e.g., diversity

estimates as described below). The methods used for normalization are an active

area of research and debate (McMurdie & Holmes, 2014; Weiss et al., 2017). In

this section we’ll explore how sampling depth impacts alpha diversity estimates

(within-sample richness, discussed in more detail below) using the

alpha-rarefaction action within the q2-diversity plugin. This visualizer

computes one or more alpha diversity metrics at multiple sampling depths, in

steps between 1 (optionally controlled with --p-min-depth) and the value

provided as --p-max-depth. At each sampling depth step, 10 rarefied tables

will be generated by default, and the diversity metrics will be computed for

all samples in the tables. The number of iterations (rarefied tables computed

at each sampling depth) can be controlled with --p-iterations. Average

diversity values will be plotted for each sample at each even sampling depth,

and samples can be grouped based on metadata categories in the resulting

Visualization if sample metadata is provided with the --m-metadata-file

parameter.

qiime diversity alpha-rarefaction \

--i-table child-table.qza \

--i-phylogeny insertion-tree.qza \

--p-max-depth 10000 \

--m-metadata-file metadata.tsv \

--o-visualization child-alpha-rarefaction.qzv

Load the child-alpha-rarefaction.qzv Visualization.

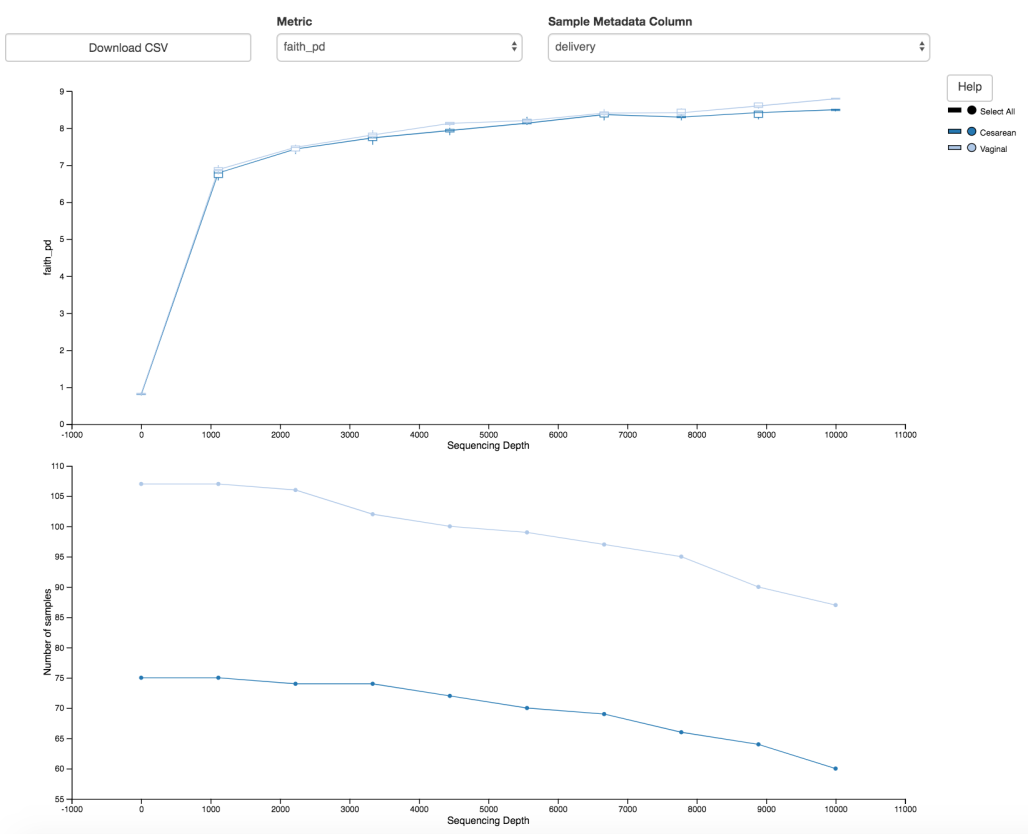

The resulting Visualization (Figure 6) has two plots. The top plot is an alpha rarefaction plot, and is primarily used to determine if the within diversity has been fully captured. If the lines in the plot appear to “level out” (i.e., approach a slope of zero) at some sampling depth along the x-axis, this suggests that collecting additional sequences is unlikely to result in any significant changes to our samples’ estimated diversity. If the lines in a plot do not level out, the full diversity of the samples may not have been captured by our sampling efforts, or it could indicate that a lot of sequencing errors remain in the data (which is being mistaken for novel diversity).

The bottom plot in this visualization is important when grouping samples by our

metadata categories. It illustrates the number of samples that remain in each

group when the feature table is rarefied to each sampling depth. If a given

sampling depth d is larger than the total frequency of a sample s

(i.e., the number of sequences that were obtained for sample s), it is not

possible to compute the diversity metric for sample s at sampling depth

d. If many of the samples in a group have lower total frequencies than

d, the average diversity presented for that group at d in the top plot

will be unreliable because it will have been computed on relatively few

samples. When grouping samples by metadata, it is therefore essential to look

at the bottom plot to ensure that the data presented in the top plot is

reliable. Try using the drop-down menus at the top of the plots to switch

between the different calculated diversity metrics and metadata categories.

As mentioned earlier, a normalization method to account for unequal sampling depth across samples in microbiome data is essential to avoid the introduction of bias. One common approach to dealing with this problem is to sample a random subset of sequences without replacement for each sample at a fixed depth (also referred to as rarefying) and discard all remaining samples with a total read counts below that threshold. This approach, which is not ideal because it discards a large amount of information (McMurdie & Holmes, 2014), has nonetheless been shown to be useful for many different microbial community analyses that are otherwise dominated by sample-to-sample variation in the number of sequences per sample obtained (Weiss et al., 2017). Selecting the depth to which to rarefy samples to is a subjective decision motivated by the desire to maximize the rarefying threshold while minimizing loss of samples due to insufficient coverage.

Let’s consider our current dataset as an example. In the rarefaction plots

above we can see that there is a natural leveling of our diversity metrics

starting at 1,000 sequences/sample, with limited additional increases observed

beyond 3,000 sequences/sample. This should be our target minimum sampling

depth. Now let’s revisit the child-table.qzv Visualization from the

Filtering data step. Select the ‘Interactive-Sample Detail’ tab from the top

left corner, and use the Metadata Category drop-down menu to select month.

Hover over each bar in the plot to see the number of samples included at each

month. Now try moving the Sampling Depth bar on the right starting from the

left (zero) to the right. You’ll see that as the sampling depth increases we

begin to rapidly lose samples as shown by the grayed areas in the bar plot. In

this dataset, the time point 0 month is better represented than the subsequent

months. We would therefore ideally minimize discarding samples from the other

underrepresented months to maintain sufficient statistical power in downstream

analyses. Start moving the Sampling Depth bar from zero again, this time stop

at the first instance where we begin to see a loss of sample at a month that is

not 0. Now scroll down to the bottom of the page. The samples highlighted in

red are the would-be discarded samples at that chosen sampling depth. Here we

see that at a depth of exactly 3,400 we are able to retain all the samples from

months 6, 12, and 24, while still maintaining a minimum depth that will capture

the overall signature of the alpha diversity metrics as seen by our rarefaction

plots.

Alternative Pipeline:

Newer methods are actively being developed that circumvent the need for rarefying by taking advantage of the compositional nature of microbiome data; we will show examples of these methods in subsequent sections. However, for some commonly used analysis tasks, no such solution yet exists.

Basic data exploration and diversity analyses¶

In the original ECAM study, in addition to monthly sampling, some participants were sampled multiple times in any given month. The exact day at which the samples were collected are recorded in the day_of_life column and again under the month column, with the values in the latter rounded to the nearest month. This rounding process allows us to easily compare samples that were collected at roughly the same month across groups, however it does introduce artificial replicates as multiple samples from the same participant will be recorded under the same month. To mitigate the appearance of these false replicates and ensure that samples meet assumptions of independence, we will filter our feature-table prior to group tests to include only one sample per subject per month. We have manually identified those samples that would be considered false replicates in rounding step under the column month_replicate and will use this to filter our table.

qiime feature-table filter-samples \

--i-table child-table.qza \

--m-metadata-file metadata.tsv \

--p-where "[month_replicate]='no'" \

--o-filtered-table child-table-norep.qza

Create a Visualization summary of this new table as before:

qiime feature-table summarize \

--i-table child-table-norep.qza \

--m-sample-metadata-file metadata.tsv \

--o-visualization child-table-norep.qzv

We are now ready to explore our microbial communities. One simple method to visualize the taxonomic composition of samples is to visualize them individually as stacked barplots. We can do this easily by providing our feature-table, taxonomy assignments, and our sample metadata file to the taxa plugin’s barplot action.

Generate the taxonomic barplot by running:

qiime taxa barplot \

--i-table child-table-norep.qza \

--i-taxonomy bespoke-taxonomy.qza \

--m-metadata-file metadata.tsv \

--o-visualization child-bar-plots.qzv

This barplot (Figure 7) shows the relative frequency of features in each sample, where you can choose the taxonomic level to display, and sort the samples by a sample metadata category or taxonomic abundance in an ascending or descending order. You can also highlight a specific feature in the barplot by clicking it in the legend. The snapshot above shows a barplot at the phylum level (level 2) where samples were sorted by day. Three phyla were highlighted to show that Proteobacteria (grey) dominate at birth but by 6 months of age the relative abundance of Bacteroidetes (green) and Firmicutes (purple) make up the majority of the community.

While barplots can be informative with regards to the composition of our microbial communities, they are hard to disentangle meaningful signals from noises.

Many microbial ecology studies use alpha diversity (within-sample richness and/or evenness) and beta diversity (between-sample dissimilarity) to reveal patterns in the microbial diversity in a set of samples. QIIME 2’s diversity analyses are available through the q2-diversity plugin, which computes a range of alpha and beta diversity metrics, applies related statistical tests, and generates interactive visualizations. The diversity metrics used in any given study should be based on the overall goals of the experiment. For a list of available diversity metrics in QIIME 2 and a brief description of the motivation behind them, we recommend reviewing the following tutorial: https://forum.qiime2.org/t/alpha-and-beta-diversity-explanations-and-commands.

In this tutorial we’ll utilize the pipeline action core-metrics-phylogenetic,

which simultaneously rarefies a FeatureTable[Frequency] to a user-specified

depth, computes several commonly used alpha and beta diversity metrics, and

generates principal coordinates analysis (PCoA) plots using the EMPeror

visualization tool (V‡zquez-Baeza, Pirrung, Gonzalez, & Knight, 2013) for each

of the beta diversity metrics. For this tutorial, we’ll use a sampling depth of

3,400 as determined from the previous step.

Compute alpha and beta diversity by entering the following commands, minding the

--p-n-jobsoption if multi-core usage is desired:

qiime diversity core-metrics-phylogenetic \

--i-table child-table-norep.qza \

--i-phylogeny insertion-tree.qza \

--p-sampling-depth 3400 \

--m-metadata-file metadata.tsv \

--p-n-jobs 4 \

--output-dir child-norep-core-metrics-results

Output artifacts:

child-norep-core-metrics-results/unweighted_unifrac_distance_matrix.qza: view | downloadchild-norep-core-metrics-results/unweighted_unifrac_pcoa_results.qza: view | downloadchild-norep-core-metrics-results/rarefied_table.qza: view | downloadchild-norep-core-metrics-results/observed_otus_vector.qza: view | downloadchild-norep-core-metrics-results/bray_curtis_distance_matrix.qza: view | downloadchild-norep-core-metrics-results/jaccard_pcoa_results.qza: view | downloadchild-norep-core-metrics-results/weighted_unifrac_distance_matrix.qza: view | downloadchild-norep-core-metrics-results/jaccard_distance_matrix.qza: view | downloadchild-norep-core-metrics-results/weighted_unifrac_pcoa_results.qza: view | downloadchild-norep-core-metrics-results/evenness_vector.qza: view | downloadchild-norep-core-metrics-results/faith_pd_vector.qza: view | downloadchild-norep-core-metrics-results/bray_curtis_pcoa_results.qza: view | downloadchild-norep-core-metrics-results/shannon_vector.qza: view | download

Output visualizations:

child-norep-core-metrics-results/weighted_unifrac_emperor.qzv: view | downloadchild-norep-core-metrics-results/jaccard_emperor.qzv: view | downloadchild-norep-core-metrics-results/bray_curtis_emperor.qzv: view | downloadchild-norep-core-metrics-results/unweighted_unifrac_emperor.qzv: view | download

By default, the following metrics are computed by this pipeline and stored within the child-core-metrics-results directory.

Alpha diversity metrics¶

Shannon’s diversity index (a quantitative measure of community richness) (Shannon & Weaver, 1949)

Observed features (a quantitative measure of community richness, called “observed OTUs” here for historical reasons);

Evenness (or Pielou’s Evenness; a measure of community evenness) (Pielou, 1966);

Faith’s Phylogenetic Diversity (a qualitative measure of community richness that incorporates phylogenetic relationships between the features) (Faith, 1992); this metric is sometimes referred to as PD_whole_tree, but we discourage the use of that name in favor of Faith’s Phylogenetic Diversity or Faith’s PD.

Beta diversity metrics¶

Jaccard distance (a qualitative measure of community dissimilarity) (P. Jaccard, 1908);

Bray-Curtis distance (a quantitative measure of community dissimilarity) (Sørensen, 1948);

unweighted UniFrac distance (a qualitative measure of community dissimilarity that incorporates phylogenetic relationships between the features) (C. Lozupone & Knight, 2005); Implementation based on Striped UniFrac (McDonald et al., 2018) method.

weighted UniFrac distance (a quantitative measure of community dissimilarity that incorporates phylogenetic relationships between the features) (C. A. Lozupone, Hamady, Kelley, & Knight, 2007); Implementation based on Striped UniFrac (McDonald et al., 2018) method.

After computing the core diversity metrics, we can begin to explore the microbial composition of the samples in the context of their metadata.

Performing statistical tests on diversity and generating interactive visualizations¶

Alpha diversity¶

We will first test for associations between our categorical metadata columns

and alpha diversity. Alpha diversity asks about the distribution of features

within each sample, and once calculated for all samples can be used to test

whether the per-sample diversity differs across different conditions (e.g.

samples obtained at different ages). The comparison makes no assumptions about

the features that are shared between samples; two samples can have the same

alpha diversity and not share any features. The rarefied

SampleData[AlphaDiversity] artifact produced in the above step contains

univariate, continuous values and can be tested using common non-parametric

statistical test (e.g. Kruskal-Wallis test) with the following command:

qiime diversity alpha-group-significance \

--i-alpha-diversity child-norep-core-metrics-results/shannon_vector.qza \

--m-metadata-file metadata.tsv \

--o-visualization child-norep-core-metrics-results/shannon-group-significance.qzv

Output visualizations:

Load the newly created shannon-group-significance.qzv Visualization.

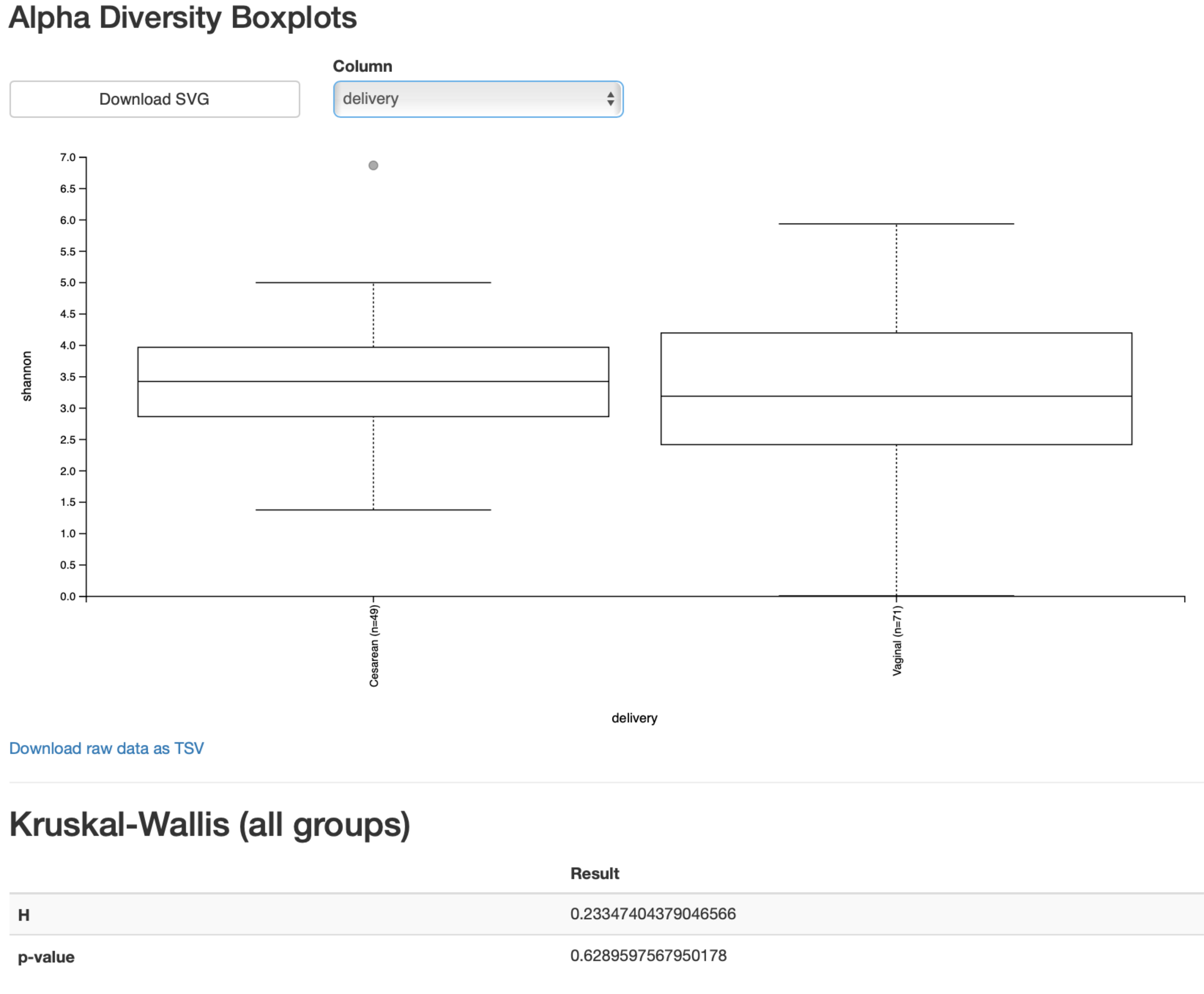

From the boxplots and Kurskal-Wallis test results (Figure 8), it appears that there are no differences between the child samples in terms of Shannon H diversity when mode of delivery is considered (p-value = 0.63). However, exposure to antibiotics appears to be associated with higher diversity (p-value = 0.026). What are the biological implications?

One important confounding factor here is that we are simultaneously analyzing our samples across all time-points and in doing so potentially losing meaningful signals at a particular time-point. Importantly, having more than one time point per subject also violates the assumption of the Kurskal-Wallis test that all samples are independent. More appropriate methods that take into account repeated measurements from the same samples are demonstrated in the longitudinal data analysis section below. It is important to note that QIIME 2 is not able to detect that: you must always be knowledgeable about the assumptions of the statistical tests that you are applying, and whether they are applicable to your data. These types of questions are common on the QIIME 2 Forum, so if you are unsure start by searching for your question on the forum, and posting your own question if you do not find a pre-existing answer.

So let’s re-analyze our data at the final (month 24) timepoint, by filtering our feature-table again:

qiime feature-table filter-samples \

--i-table child-table-norep.qza \

--m-metadata-file metadata.tsv \

--p-where "[month]='24'" \

--o-filtered-table table-norep-C24.qza

Next, we’ll re-run the core-metrics-phylogenetic pipeline. Visualize the summary of this new table and select a new sampling depth as shown in the previous section. Re-run core-metrics-phylogenetic:

qiime diversity core-metrics-phylogenetic \

--i-table table-norep-C24.qza \

--i-phylogeny insertion-tree.qza \

--p-sampling-depth 3400 \

--m-metadata-file metadata.tsv \

--p-n-jobs 4 \

--output-dir norep-C24-core-metrics-results

Output artifacts:

norep-C24-core-metrics-results/unweighted_unifrac_distance_matrix.qza: view | downloadnorep-C24-core-metrics-results/unweighted_unifrac_pcoa_results.qza: view | downloadnorep-C24-core-metrics-results/rarefied_table.qza: view | downloadnorep-C24-core-metrics-results/observed_otus_vector.qza: view | downloadnorep-C24-core-metrics-results/bray_curtis_distance_matrix.qza: view | downloadnorep-C24-core-metrics-results/jaccard_pcoa_results.qza: view | downloadnorep-C24-core-metrics-results/weighted_unifrac_distance_matrix.qza: view | downloadnorep-C24-core-metrics-results/jaccard_distance_matrix.qza: view | downloadnorep-C24-core-metrics-results/weighted_unifrac_pcoa_results.qza: view | downloadnorep-C24-core-metrics-results/evenness_vector.qza: view | downloadnorep-C24-core-metrics-results/faith_pd_vector.qza: view | downloadnorep-C24-core-metrics-results/bray_curtis_pcoa_results.qza: view | downloadnorep-C24-core-metrics-results/shannon_vector.qza: view | download

Output visualizations:

And finally, run the alpha-group-significance action again:

qiime diversity alpha-group-significance \

--i-alpha-diversity norep-C24-core-metrics-results/shannon_vector.qza \

--m-metadata-file metadata.tsv \

--o-visualization norep-C24-core-metrics-results/shannon-group-significance.qzv

Output visualizations:

Load this new Visualization.

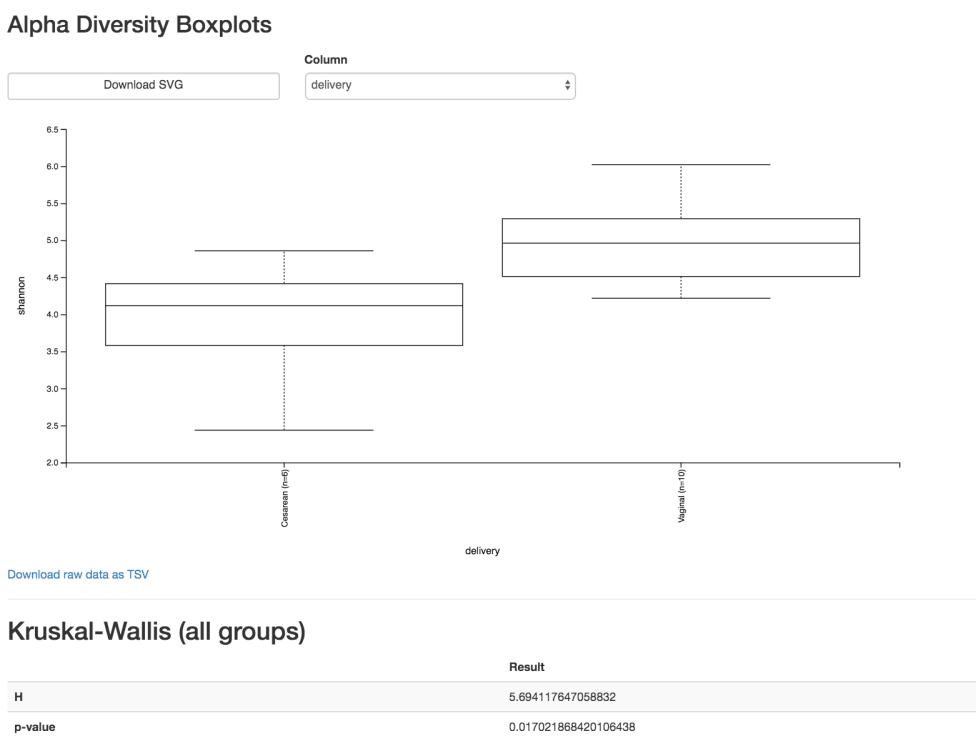

We can see now that at month 24, vaginal birth appears to be associated with a higher Shannon value than cesarean birth (p-value = 0.02, Figure 9), while antibiotic exposure is no longer associated with differences in Shannon diversity (p-value = 0.87).

Beta diversity¶

Next, we’ll compare the structure of the microbiome communities using beta

diversity. We start by making a visual inspection of the principal coordinates

plots (PCoA) plots that were generated in the previous step. Load the

unweighted_unifrac_emperor.qzv Visualization from the

norep-C24-core-metrics-results folder.

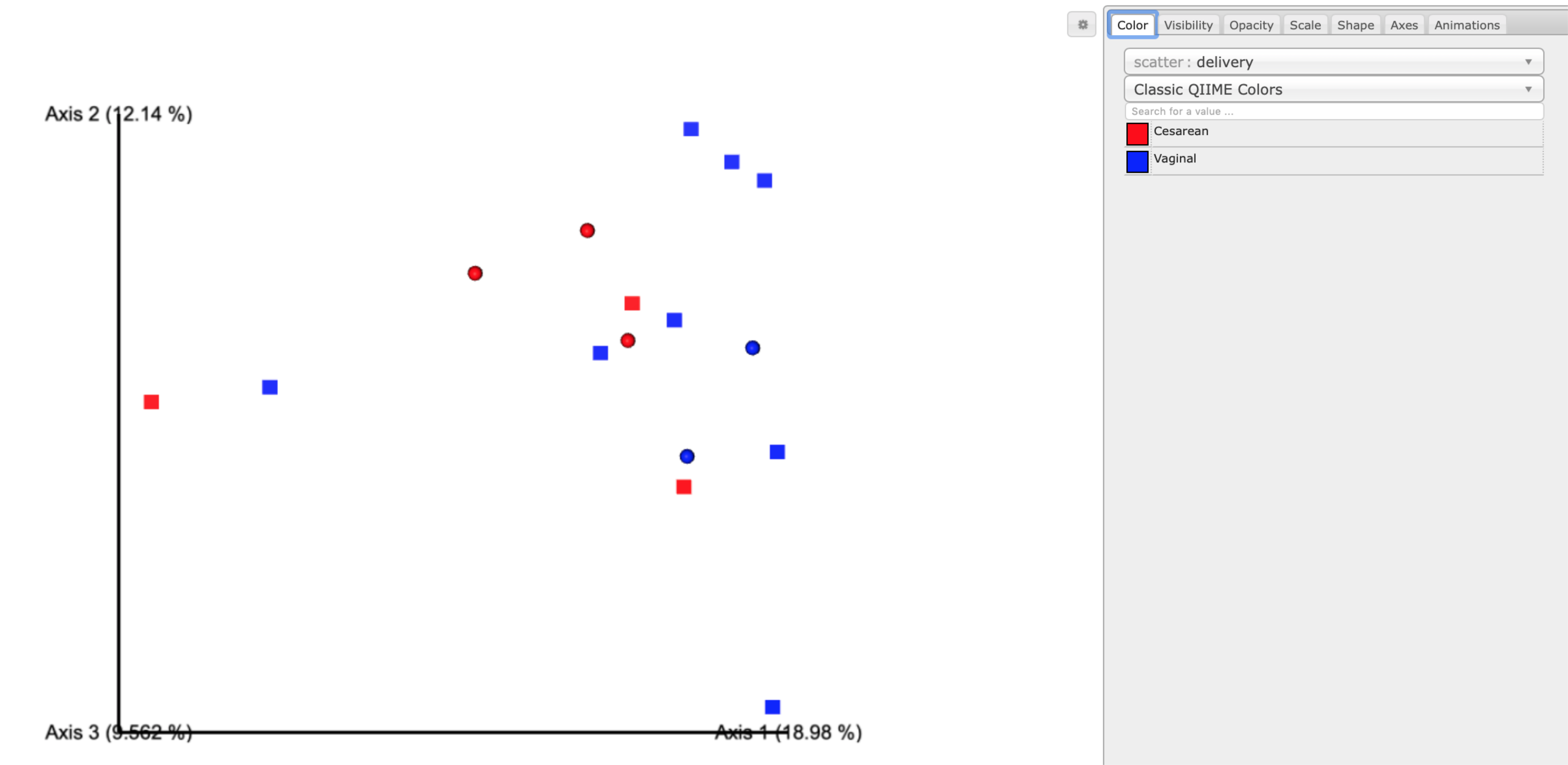

Each dot in the PCoA plot (Figure 10) represents a sample, and users can color them according to their metadata category of interest and rotate the 3D figure to see whether there is a clear separation in beta diversity driven by these covariates. Moreover, users can customize their figures using existing drop-down menus by hiding certain samples in ‘Visibility’, changing the brightness of dots in ‘Opacity’, controlling their size in ‘Scale’, choosing different shapes for samples in ‘Shape’, modifying the color of axes and background in ‘Axes’ and creating a moving picture under the ‘Animations’ tabs.

Alternative Pipeline:

Visualizing Longitudinal Variation with Emperor. For longitudinal studies, we’ve found great use in visualizing temporal variability using animated traces in Emperor. By doing this, you can follow the longitudinal dynamics sample by sample and subject by subject. In order to do so, you need two metadata categories one to order the samples (Gradient category) and one to group the samples (Trajectory category). For this dataset we can use the animations_gradient as the category that orders the samples, and the animations_subject as the category that groups our samples.

The values in animations_gradient represent the age in months. In this category samples with no longitudinal data are set to 0, note that all values have to be numeric in order for the animation to be displayed. The animations_subject category includes unique identifiers for each subject. Put together, these two categories will result in animated traces on a per-individual basis.

In Emperor’s user interface, go to the ‘Animations’ tab, and select animations_gradient under the Gradient menu and select animations_subject under the Trajectory menu. Then click ‘play’, you’ll see animated traces moving on the plot. You can adjust the speed and the radius of the trajectories. To start over, click on the ‘back’ button. Using the ECAM dataset, we have generated an animation visualizing the temporal trajectories of one vaginal born and one cesarean baby in the 3D PCoA plot. This animation is available at https://raw.githubusercontent.com/qiime2/paper2/master/sphinx_docs/_static/animation.mov

For more information about animated ordinations, visit Emperor’s online tutorial at https://biocore.github.io/emperor/build/html/tutorials/animations.html.

When we color the samples by delivery mode and change the shape of male infants to squares, no obvious clusters are observed. There may be a general trend towards vaginal birth children separating from cesarean birth samples along Axis 1, which would suggest that microbial composition of cesarean born children are phylogenetically more related within their own groups than that of the vaginal birth group. However, given the low sample size in the cesarean group, we are likely underpowered to detect these changes statistically. Nevertheless, we can test our hypothesis using a PERMANOVA, which tests the hypothesis that distances between samples within one group (within group distances) differ from the distances to samples in another group (across group distances). Other relevant tests in QIIME 2 exist, such as ANOSIM, PERMDISP, or the Mantel test; the choice of test should be carefully considered with regards to the biological question at hand, see Anderson and Walsh (2013) for an overview of these tests (Anderson & Walsh, 2013). It is also important to note that these tests are useful when testing pre-existing hypotheses about your data, but cannot be used for testing new hypotheses that were generated by looking at PCoA results. New hypotheses must unfortunately be tested with new, independent data. Here, we perform the PERMANOVA test with the following command:

qiime diversity beta-group-significance \

--i-distance-matrix norep-C24-core-metrics-results/unweighted_unifrac_distance_matrix.qza \

--m-metadata-file metadata.tsv \

--m-metadata-column delivery \

--p-pairwise \

--o-visualization norep-C24-core-metrics-results/uw_unifrac-delivery-significance.qzv

Output visualizations:

Load the Visualization.

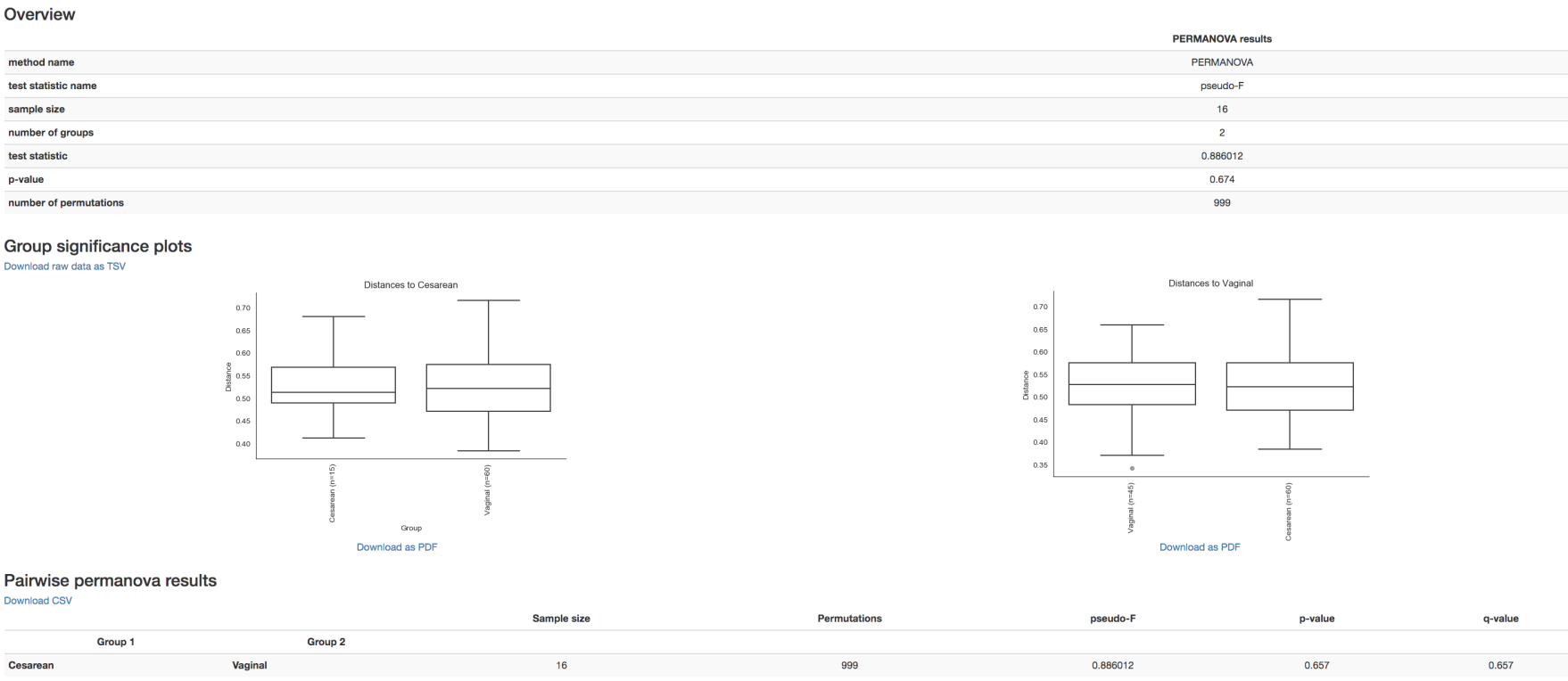

The overview statistics (Figure 11) provide us the parameters used in the PERMANOVA test and the resulting values of test statistic and p-value. The boxplots show the pairwise distance between cesarean and vaginal birth. Lastly, the table (in Figure 11) summarizes the results from PERMANOVA and gives an additional q-value (adjusted p-value for multiple testing). The PERMANOVA test confirms our initial assessment that vaginal borns microbial communities are not statistically different from cesarean born communities in beta diversity (as represented by unweighted UniFrac distances) at month 24 (p-value = 0.38). These results however should be interpreted cautiously given the limited sample size in this dataset. We would conclude that further experiments would be needed to confirm our findings.

Alternative Pipeline:

The beta diversity analysis above was carried on a rarefied subset of our data. An alternative method that does not require rarefying is offered through the external q2-deicode plugin (https://library.qiime2.org/plugins/deicode). DEICODE is a form of Aitchison Distance that is robust to compositional data with high levels of sparsity (Martino et al., 2019). This plugin can be used to generate a beta diversity ordination artifact which can easily be utilized with the existing architecture in QIIME 2 such as visualization with q2-emperor and hypothesis testing with the beta-group-significance as above.

Longitudinal data analysis¶

When microbial data is collected at different timepoints, it is useful to examine dynamic changes in the microbial communities (longitudinal analysis). This section is devoted to longitudinal microbiome analysis using the q2-longitudinal plugin (Bokulich, Dillon, Zhang, et al., 2018). This plugin can perform a number of analyses such as: visualization using volatility plots, testing temporal trends in alpha and beta diversities, using linear mixed effects models to test for changes in diversity metrics or individual features with regards to metadata categories of interest, and more. A comprehensive list of available methods and instructions on how to perform them are available in the online tutorial: https://docs.qiime2.org/2019.10/tutorials/longitudinal/. Here we will demonstrate some of these methods.

Linear mixed effects (LME) models¶

In a previous section we determined that Shannon diversity was significantly lower in cesarean born children at 24 months of age. But what about the change in Shannon diversity throughout the 24 months. LME models enable us to test the relationship between a single response variable (i.e. Shannon metric) and one or more independent variables (ex. delivery mode, diet), where observations are made across dependent samples, e.g., in repeated-measures sampling experiments. LME models can also account for a random effect (ex. individuals, sampling times etc.) variable. Here we will use the linear-mixed-effects action which requires the following inputs: the diversity metric of choice calculated for all samples across 24 months (in the child-core-metrics-results folder), the metric name, our sample metadata file, a comma separated list of covariates to include in the model, the random effect variable (day_of_life), the column name from the metadata file containing the numeric state (i.e day_of_life), as well as the column name from the metadata file containing the individuals’ ID names to track through time. Unlike the group significant tests in the previous steps, LME models can handle continuous variables, therefore, we will utilize our full dataset by calling on the day_of_life column instead of month. We’ll need to calculate our diversity metrics again on the full dataset before replicates were removed:

qiime diversity core-metrics-phylogenetic \

--i-table child-table.qza \

--i-phylogeny insertion-tree.qza \

--p-sampling-depth 3400 \

--m-metadata-file metadata.tsv \

--p-n-jobs 4 \

--output-dir child-core-metrics-results

Output artifacts:

child-core-metrics-results/unweighted_unifrac_distance_matrix.qza: view | downloadchild-core-metrics-results/unweighted_unifrac_pcoa_results.qza: view | downloadchild-core-metrics-results/rarefied_table.qza: view | downloadchild-core-metrics-results/observed_otus_vector.qza: view | downloadchild-core-metrics-results/bray_curtis_distance_matrix.qza: view | downloadchild-core-metrics-results/jaccard_pcoa_results.qza: view | downloadchild-core-metrics-results/weighted_unifrac_distance_matrix.qza: view | downloadchild-core-metrics-results/jaccard_distance_matrix.qza: view | downloadchild-core-metrics-results/weighted_unifrac_pcoa_results.qza: view | downloadchild-core-metrics-results/evenness_vector.qza: view | downloadchild-core-metrics-results/faith_pd_vector.qza: view | downloadchild-core-metrics-results/bray_curtis_pcoa_results.qza: view | downloadchild-core-metrics-results/shannon_vector.qza: view | download

Output visualizations:

To demonstrate how covariates can be included in an LME model, here we will test the effects of delivery method and diet (predominantly breast-fed versus predominantly formula-fed during the first 3 months of life) simultaneously using the following:

qiime longitudinal linear-mixed-effects \

--m-metadata-file metadata.tsv \

--m-metadata-file child-core-metrics-results/shannon_vector.qza \

--p-metric shannon \

--p-random-effects day_of_life \

--p-group-columns delivery,diet \

--p-state-column day_of_life \

--p-individual-id-column host_subject_id \

--o-visualization lme-shannon.qzv

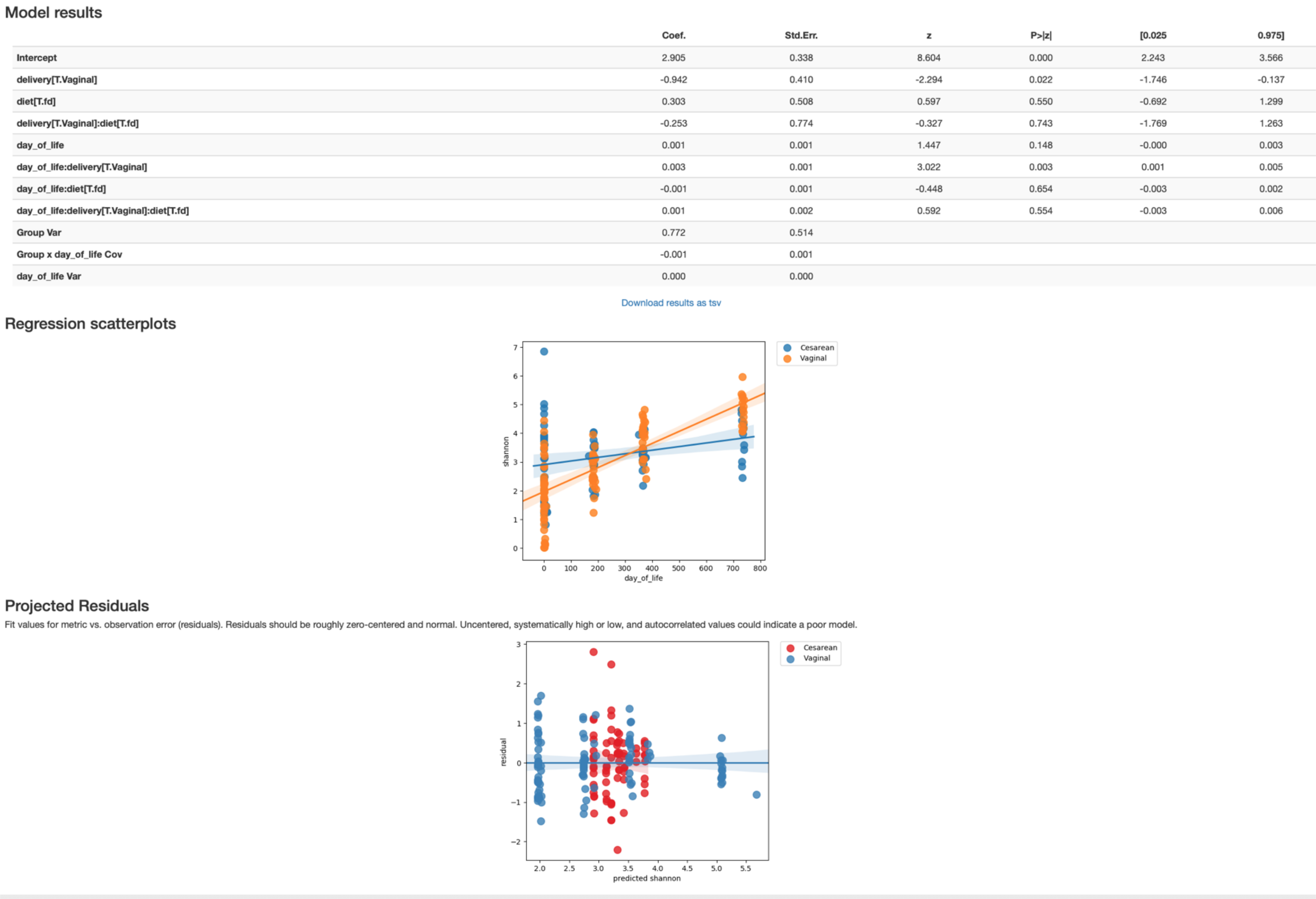

In this Visualization (Figure 12), the model results provide all the outputs from the LME model, where we see a significant birth mode effect in Shannon diversity over time (p-value = 0.016), while the diet has no bearing in Shannon diversity across time (p-value = 0.471). The regression scatterplots (top) overlap the predicted group mean trajectories on the observed data (dots), and the projected residuals plot (bottom) can help users to check the validity of an LME model. For more details, see https://docs.qiime2.org/2019.10/tutorials/longitudinal/.

Volatility visualization¶

The volatility visualizer generates interactive line plots that allow us to assess how volatile a dependent variable is over a continuous, independent variable (e.g., time) in one or more groups. Multiple metadata files (including alpha and beta diversity) and feature tables can be used as input, and in the interactive visualization we can select different dependent variables to plot on the y-axis. Here we examine how variance in Shannon diversity changes across time in our cohort, both in groups of samples (interactively selected) and in individual subjects.

The volatility plot can be generated by running:

qiime longitudinal volatility \

--m-metadata-file metadata.tsv \

--m-metadata-file child-core-metrics-results/shannon_vector.qza \

--p-default-metric shannon \

--p-default-group-column delivery \

--p-state-column month \

--p-individual-id-column host_subject_id \

--o-visualization shannon-volatility.qzv

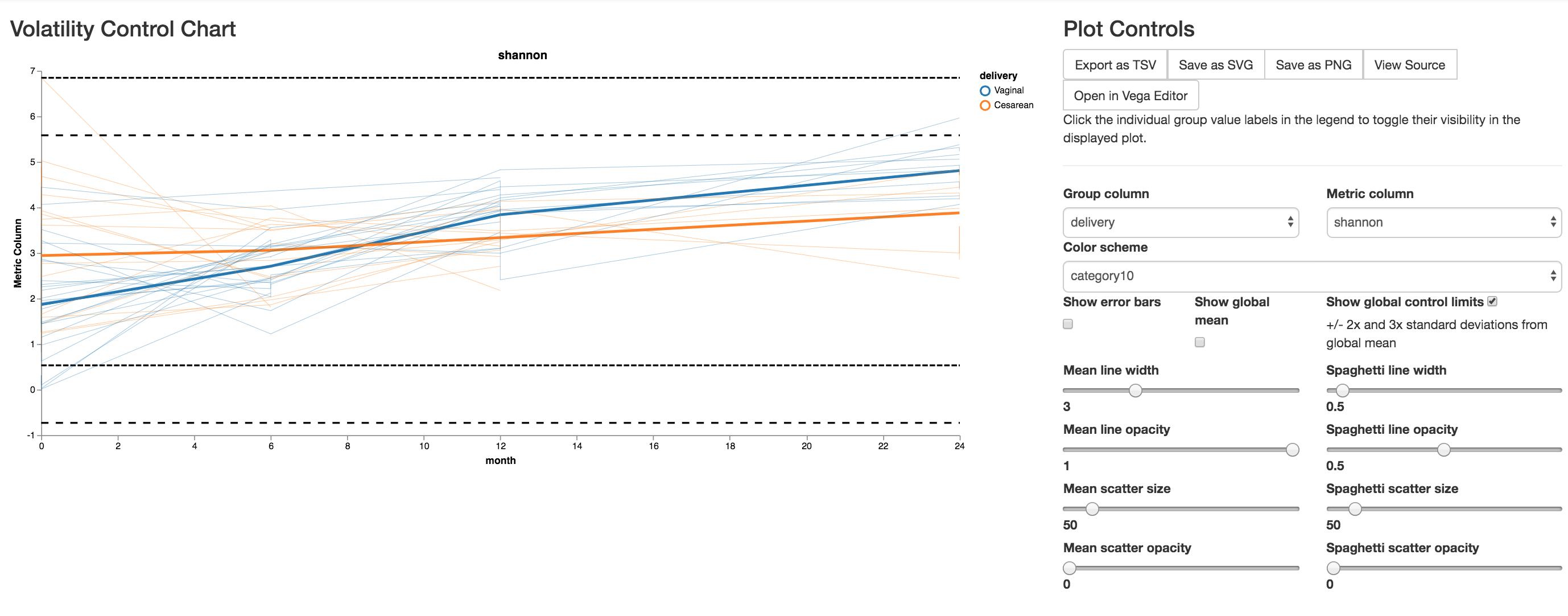

The volatility plot (Figure 13) shows the mean curve of each group in selected group column on top of individual trajectories over time. This plot can be useful in identifying outliers qualitatively, by turning on ‘show global control limits’ to show +/- 2x and 3x standard deviation lines from global mean. Observations above those global control limits are susceptible to be outliers. In this analysis, we see high variance at time zero, while they become more similar by month 8, and by month 24, vaginally-born children appear to be higher than cesarean-born (as expected).

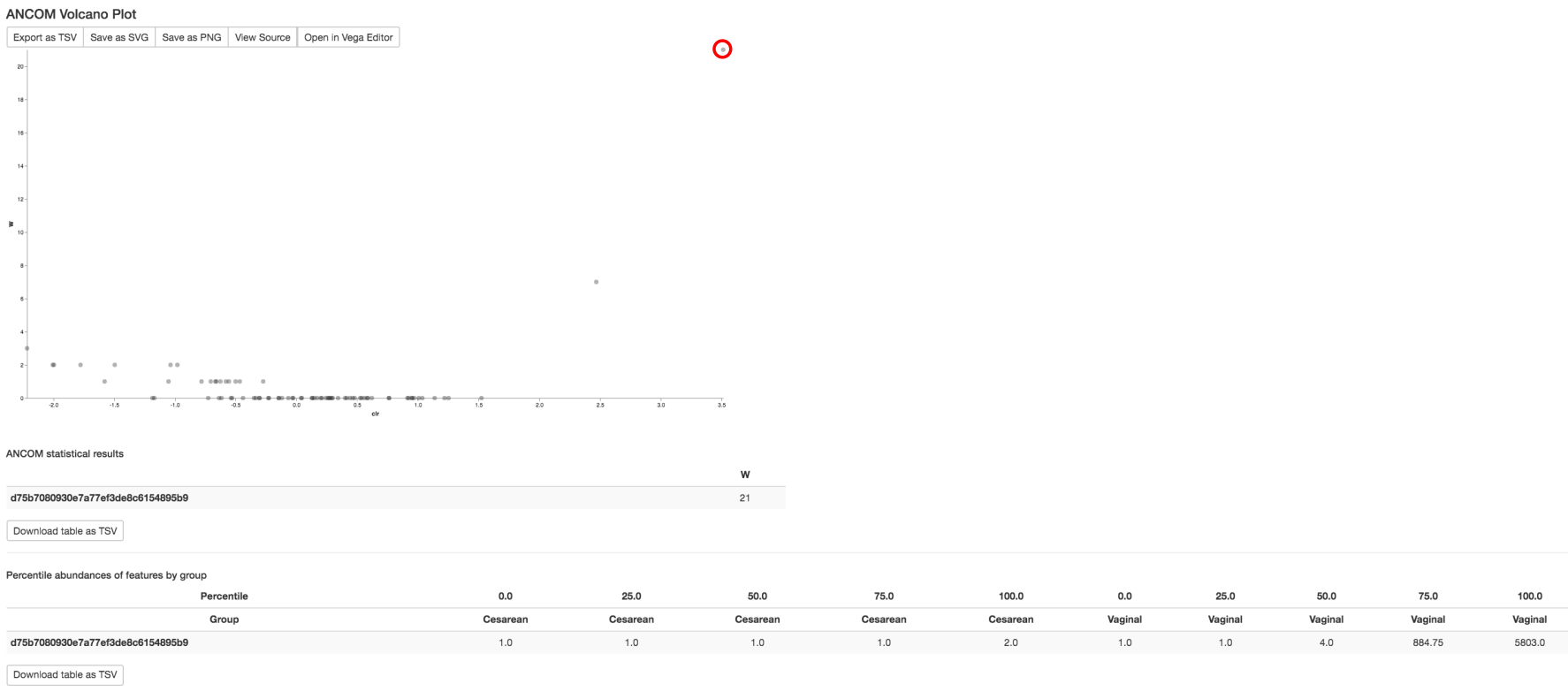

Differential abundance testing¶